Issue #64 - Neural Machine Translation with Byte-Level Subwords

13 Dec 2019

Introduction

In order to limit vocabulary, most neural machine translation engines are based on subwords. In some settings, character-based systems are even better (see issue #60). However, rare characters in noisy data or character-based languages can unnecessarily take up vocabulary slots and limit its compactness. In this post we take a look at an alternative, proposed by Wang et al. (2019), in which subwords are trained directly from bytes instead of characters.

Byte-Level Text Representation

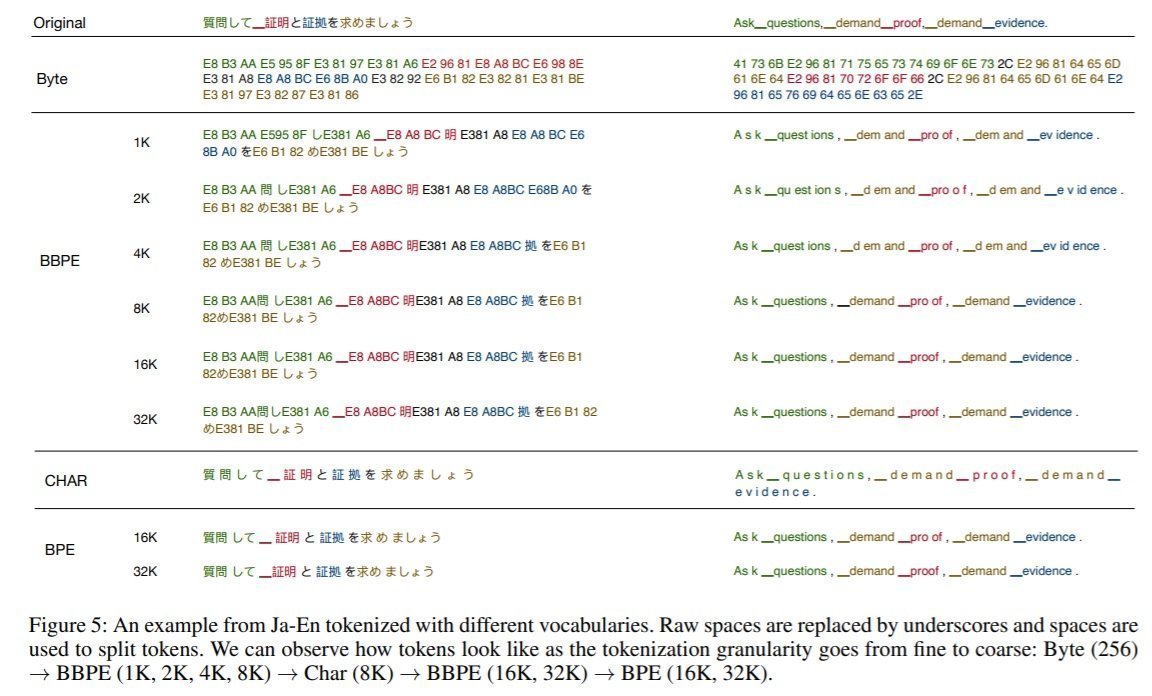

In UTF-8 encoding, each character is encoded into 1 to 4 bytes. This allows us to model a sentence as a sequence of bytes instead of characters. While there are 138,000 unicode characters, a sentence can be represented as a sequence of UTF-8 bytes (248 out of 256 possible bytes). A representation of text based on bytes is up to 4 times longer than a representation based on characters, thus it is computationally more expensive. As an alternative, Wang et al. segment a byte sequence into byte-level subwords, that is byte n-grams. They do so with the Byte Pair Encoding (BPE) algorithm, which extracts the k most frequent n-grams, k being the resulting vocabulary size. The following figure gives examples of decomposition into Bytes, BBPE subwords, characters (CHAR) or BPE subwords. Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.

Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.