Introduction

In order to limit vocabulary, most neural machine translation engines are based on subwords. In some settings, character-based systems are even better (see issue #60). However, rare characters in noisy data or character-based languages can unnecessarily take up vocabulary slots and limit its compactness. In this post we take a look at an alternative, proposed by Wang et al. (2019), in which subwords are trained directly from bytes instead of characters.

Byte-Level Text Representation

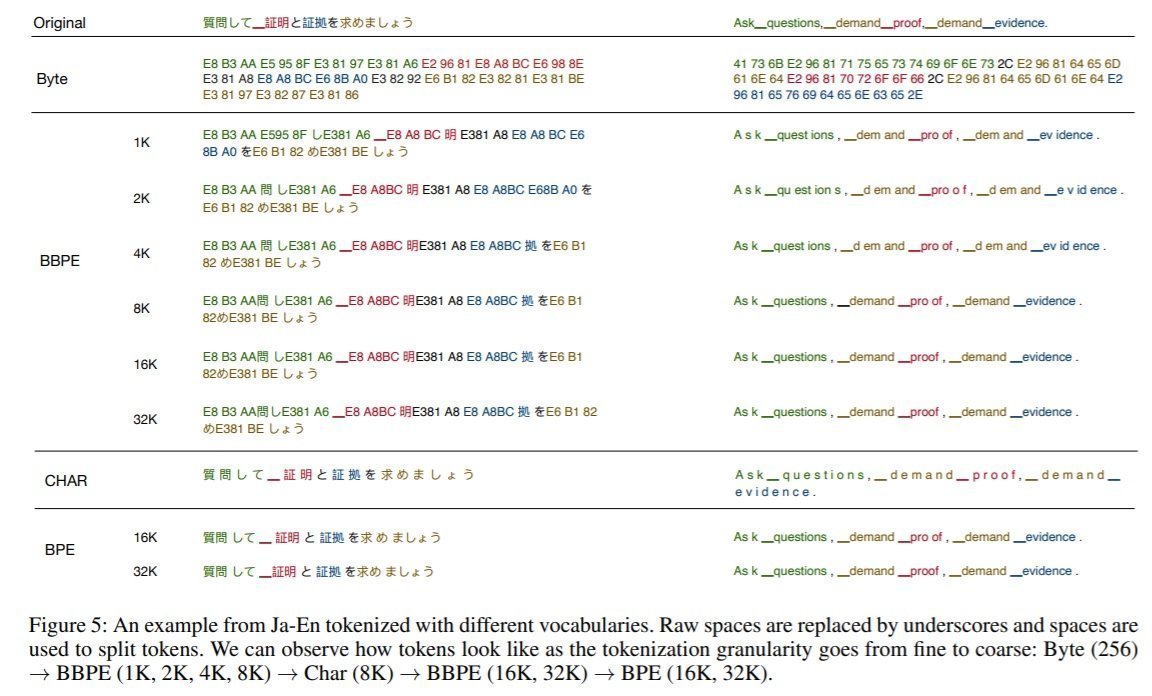

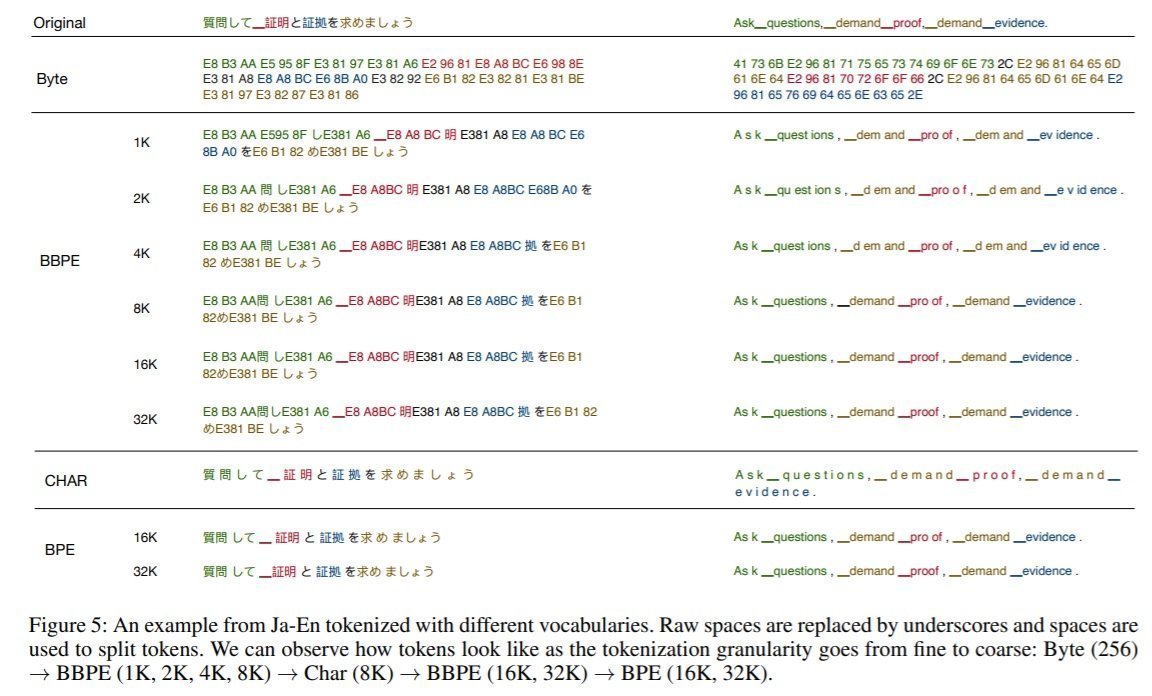

In UTF-8 encoding, each character is encoded into 1 to 4 bytes. This allows us to model a sentence as a sequence of bytes instead of characters. While there are 138,000 unicode characters, a sentence can be represented as a sequence of UTF-8 bytes (248 out of 256 possible bytes). A representation of text based on bytes is up to 4 times longer than a representation based on characters, thus it is computationally more expensive. As an alternative, Wang et al. segment a byte sequence into byte-level subwords, that is byte n-grams. They do so with the Byte Pair Encoding (BPE) algorithm, which extracts the k most frequent n-grams, k being the resulting vocabulary size. The following figure gives examples of decomposition into Bytes, BBPE subwords, characters (CHAR) or BPE subwords.  Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.

Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.

Qualitative comparison with BPE

BBPE offers the flexibility of decomposing rare characters into byte n-grams from the vocabulary instead of including them directly. This frees vocabulary slots for other frequent symbols. Thus BBPE symbols are more evenly distributed than BPE ones. The 15-20% of least frequent BPE symbols are namely very rare, which does not occur with BBPE. In a Japanese or a multilingual source, the percentage of BBPE tokens with partial characters is 30 to 50%, while in German or English it lies around 5%.

Noisy Character Sets

The authors experiment on a noisy English-German training corpus, in which there are 3,400 different characters, while both languages have less than 30 characters. Since BPE includes all characters, those rare characters will waste quite a lot of BPE vocabulary slots, which does not happen with BBPE. They obtain similar BLEU scores with a 2,000 or 4,000 BBPE vocabulary as with a 16,000 or 32,000 BPE vocabulary, although the BBPE-based models are 15% more compact.

Character-Rich Languages

Wang et al. perform an experiment on a Japanese-English dataset which has a set of 7.9K Japanese characters, where 99.99% of tokens are covered by the top 2.4K characters. They thus train a neural MT engine with a 4,000 BBPE vocabulary, and find that BBPE is comparable to BPE or slightly outperforms BPE in terms of BLEU score depending on the model size (BLEu score is 19.6 for BBPE instead of 19.1 for BPE).

Multilingual NMT

The authors report an experiment with a many-to-English dataset containing 58 languages (parallelly to English) and 10.8K characters from different writing systems. The characters between languages are not necessarily shared, but the byte n-grams are. Thus in a multilingual NMT context, using byte-level subwords maximizes vocabulary sharing. This yields some improvement in BLEU score for BBPE-based models with respect to BPE-based models (BLEU score is 29.9 for BBPE instead of 29.6 for BPE).

In summary

When dealing with noisy character sets, character-based languages or multilingual neural MT, byte-level BPE symbols are a useful alternative to BPE symbols. BBPE-based models may perform better and are more compact than the BPE-based ones. However, BBPE sequences are slightly longer than BPE ones, which slows down training and inference. In the settings in which character-based models outperform BPE-based models, BBPE-based models may perform similarly to character-based ones, but at a higher efficiency.

Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.

Byte-level BPE (BBPE) symbols can be partial characters or a combination of complete and partial characters. This has two consequences. Firstly, it introduces ambiguity to determine the character boundaries. The authors add a module to incorporate a larger context surrounding each symbol for disambiguation and learning the character boundaries. Secondly, a sequence of BBPE symbols can be an invalid sentence. During decoding, a dynamic programming strategy is adopted to ensure that only BBPE sequences corresponding to valid character sequences are output.