Issue #75 - Echo State Neural Machine Translation

Introduction

In general, the weights of a neural MT model are randomly initialized and then trained on a parallel corpus. In this post, we discuss a neural MT architecture proposed by Garg et al. (2020), named echo state neural machine translation (ESNMT), which is based on the echo state network (ESN). In ESNMT, the weights of the encoder and decoder recurrent layers are randomly generated and kept fixed throughout training.

Echo State Neural MT

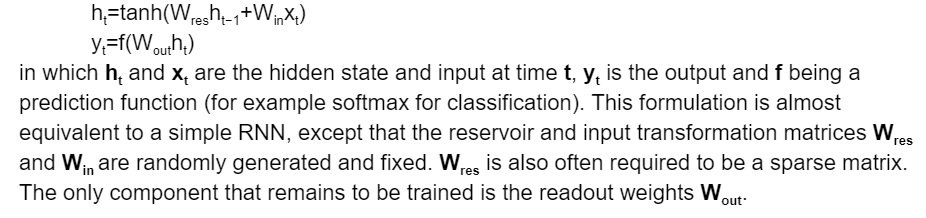

ESN (Jaeger, 2010) is a special type of recurrent neural network (RNN) in which the recurrent matrix (known as the “reservoir”) and the input transformation are randomly generated and then fixed, and the only trainable component is the output layer (known as the “readout”). A very basic version of an ESN has the following formulation:

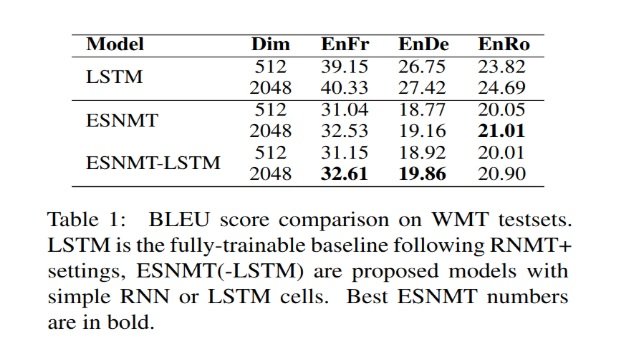
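The formulation above is shown only as an image, so the following is a minimal Python sketch assuming the common textbook form of a basic ESN, h_t = tanh(W_in x_t + W_res h_{t-1}) and y_t = W_out h_t. The exact equation in the figure may differ (for example, in the choice of nonlinearity). The class name `BasicESN`, the uniform initialization, and the rescaling of the reservoir to an infinity norm of 0.9 are illustrative assumptions, not details taken from Garg et al. (2020). W_in and W_res are generated once from a seed and never updated; only W_out would be trained.

```python
import math
import random

def random_matrix(rows, cols, rng, scale=1.0):
    """Dense list-of-lists matrix with entries uniform in [-scale, scale]."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(mat, vec):
    """Matrix-vector product for list-of-lists matrices."""
    return [sum(w * v for w, v in zip(row, vec)) for row in mat]

def scale_to_norm(mat, target=0.9):
    """Rescale so the maximum absolute row sum (infinity norm) equals `target`.
    A norm below 1 is a simple sufficient condition for the tanh update to
    forget its initial state (the echo state property)."""
    norm = max(sum(abs(w) for w in row) for row in mat)
    return [[w * target / norm for w in row] for row in mat]

class BasicESN:
    """Hypothetical minimal ESN: fixed W_in and W_res, trainable W_out."""

    def __init__(self, input_dim, reservoir_dim, output_dim, seed=0):
        rng = random.Random(seed)
        # Fixed (never trained): input transformation and reservoir.
        self.w_in = random_matrix(reservoir_dim, input_dim, rng)
        self.w_res = scale_to_norm(random_matrix(reservoir_dim, reservoir_dim, rng))
        # Trainable readout, initialized to zeros in this sketch.
        self.w_out = [[0.0] * reservoir_dim for _ in range(output_dim)]
        self.reservoir_dim = reservoir_dim

    def states(self, inputs):
        """h_t = tanh(W_in x_t + W_res h_{t-1}), starting from h_0 = 0."""
        h = [0.0] * self.reservoir_dim
        out = []
        for x in inputs:
            pre = [a + b for a, b in zip(matvec(self.w_in, x), matvec(self.w_res, h))]
            h = [math.tanh(p) for p in pre]
            out.append(h)
        return out

    def readout(self, h):
        """y_t = W_out h_t (linear readout)."""
        return matvec(self.w_out, h)

esn = BasicESN(input_dim=3, reservoir_dim=16, output_dim=2, seed=42)
hs = esn.states([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
print(len(hs), len(hs[0]))  # 3 16
print(esn.readout(hs[-1]))  # zeros until W_out is trained
```

Rescaling by the infinity norm is used here only because it needs no linear-algebra library; ESN implementations more commonly rescale the reservoir by its spectral radius.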

Training: The trainable parameters of the model are trained using gradient descent as usual. Note that since the recurrent layer weights are fixed and their gradients are not calculated, the problem of exploding/vanishing gradients in RNNs is alleviated. Therefore, we expect no significant difference in quality between ESNMT and ESNMT-LSTM.
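To illustrate what freezing the recurrence means for training, here is a toy sketch in the classic ESN setting described above, where only the readout is trained by gradient descent. Because the reservoir never changes, its states can be computed once, and the loss becomes a simple quadratic in W_out. The sine-prediction task, the helper names (`run_reservoir`, `train_readout`, `mse`), and the learning rate are hypothetical; the sketch does not model the other trainable parts of a full ESNMT model.

```python
import math
import random

def run_reservoir(w_in, w_res, inputs):
    """Frozen recurrence h_t = tanh(w_in * x_t + W_res h_{t-1}) for scalar inputs."""
    n = len(w_res)
    h = [0.0] * n
    states = []
    for x in inputs:
        h = [math.tanh(w_in[i] * x + sum(w_res[i][j] * h[j] for j in range(n)))
             for i in range(n)]
        states.append(h)
    return states

def predict(w_out, h):
    """Linear readout y_t = W_out h_t."""
    return sum(w * s for w, s in zip(w_out, h))

def mse(w_out, states, targets):
    """Mean squared error of the readout over all time steps."""
    return sum((predict(w_out, h) - t) ** 2 for h, t in zip(states, targets)) / len(targets)

def train_readout(states, targets, lr=0.02, steps=100):
    """Gradient descent on the readout only. The states were computed once
    from the frozen reservoir, so no gradient is propagated through time."""
    n = len(states[0])
    w_out = [0.0] * n
    for _ in range(steps):
        grad = [0.0] * n
        for h, t in zip(states, targets):
            err = predict(w_out, h) - t
            for i in range(n):
                grad[i] += 2.0 * err * h[i] / len(targets)
        w_out = [w - lr * g for w, g in zip(w_out, grad)]
    return w_out

rng = random.Random(0)
n = 20
w_in = [rng.uniform(-1.0, 1.0) for _ in range(n)]
w_res = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
norm = max(sum(abs(w) for w in row) for row in w_res)
w_res = [[w * 0.9 / norm for w in row] for row in w_res]  # contractive updates
frozen = [row[:] for row in w_res]  # snapshot to confirm the reservoir never changes

# Hypothetical toy task: predict the next sample of a sine wave.
xs = [math.sin(0.2 * t) for t in range(100)]
inputs, targets = xs[:-1], xs[1:]
states = run_reservoir(w_in, w_res, inputs)
w_out = train_readout(states, targets)
print(mse([0.0] * n, states, targets), "->", mse(w_out, states, targets))
assert w_res == frozen
```

The learning rate of 0.02 keeps the updates stable: every state entry lies in (-1, 1), so the curvature of this quadratic loss is below 40, and gradient descent on a quadratic decreases the loss whenever the step size is below 2 divided by that curvature.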

Model Compression: Since the randomized components of ESNMT can be regenerated deterministically from a fixed random seed, storing the model offline only requires saving the seed along with the remaining trainable parameters.
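The seed-based storage idea can be sketched as follows, assuming the frozen weights come from a seeded pseudo-random generator. `fixed_weights`, `save_model`, `load_model`, and the dictionary format are hypothetical illustrations, not the paper's implementation. The sketch draws values only through `random.Random.random()`, whose sequence for a given seed Python documents as stable; a real system would also need to pin the generator and its exact sampling procedure so that the frozen weights regenerate bit for bit.

```python
import random

def fixed_weights(seed, rows, cols):
    """Regenerate frozen weights deterministically from a seed.
    Uses only rng.random(), mapped from [0, 1) to [-1, 1)."""
    rng = random.Random(seed)
    return [[2.0 * rng.random() - 1.0 for _ in range(cols)] for _ in range(rows)]

def save_model(seed, shape, readout):
    """Hypothetical storage format: the seed and shape replace the frozen matrix."""
    return {"seed": seed, "shape": shape, "readout": list(readout)}

def load_model(stored):
    """Rebuild the frozen matrix from the seed; trained weights are read back as-is."""
    rows, cols = stored["shape"]
    return fixed_weights(stored["seed"], rows, cols), stored["readout"]

reservoir = fixed_weights(seed=1234, rows=256, cols=256)
readout = [0.1] * 256  # stand-in for trained readout parameters
stored = save_model(1234, (256, 256), readout)

restored, restored_readout = load_model(stored)
assert restored == reservoir and restored_readout == readout

full_count = 256 * 256 + len(readout)  # numbers stored without the seed trick
stored_count = 1 + len(readout)        # seed + trained weights
print(full_count, stored_count)  # 65792 257
```

In this toy the frozen matrix dominates the parameter count, so replacing it with a single seed shrinks what must be stored from 65,792 numbers to 257.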