Issue #96 - Using Annotations for Machine Translating Named Entities

Introduction

Getting the translation of named entities right is not a trivial task and Machine Translation (MT) has traditionally struggled with it. If a named entity is wrongly translated, the human eye will quickly spot it, and more often than not, those mistranslations will make people burst into laughter as machines can, seemingly, be very creative.

To a certain extent, named entities could be treated in MT the same way that we treat Terminology, another tricky field which we have tackled before in issues #7 (Terminology in Neural MT (NMT)) and #79 (Merging Terminology into Neural Machine Translation) of our blog series. In fact, in issue #79, one of the areas tackled in Wang et al. (2019) is precisely the translation of named entities.

In today’s issue we look into the work done by Modrzejewski et al. (2020), who explore whether incorporating external annotations improves the translation of named entities in NMT. Their approach consists of using a named entity recognition (NER) system to annotate the data prior to training an encoder-decoder Transformer model. They focus on three main types of named entity: organization, location and person and also explore the impact of the granularity of the named entity class annotations in translation quality.

Experiments

Modrzejewski et al. (2020) run experiments in two language pairs: English→German and English→Chinese. They follow the work done by Sennrich and Haddow (2016) to incorporate the named entities' information as additional parallel streams (source factors). This means that the additional information is only applied to the source text and at the word level in the form of either added or concatenated word embeddings.

To run their NMT experiments they take the data from the WMT2019 news translation task and reduce the training set for the Chinese engine slightly to run comparable experiments in terms of size of the corpora used.

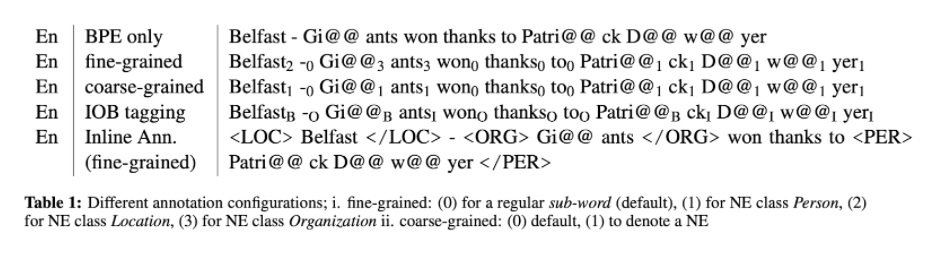

Prior to training their NMT systems, they run the NER system included in spaCy to identify and annotate the named entities on the source text. Next, they perform a joint source and target byte-pair encoding (BPE) for English→ German and disjoint for English→Chinese. They generate two files with source factors: one marking named entities (either the coarse-grained or the fine-grained case), and one with inside-outside-beginning (IOB) tagging, whereby (B) indicates the beginning, (I) the inside and (O) the outside of a named entity. The image below, taken from their paper, shows the different configurations:  Their experiments include two baseline models and 5 different setups:

Their experiments include two baseline models and 5 different setups:

- The baseline model, where nothing was done;

- A second baseline using the inline annotation method with XML boundary tags proposed by Li et al. (2018);

- A model adding to the word embeddings the fine-grained annotations as a first source factor;

- A model concatenating to the word embeddings the fine-grained annotations as a first source factor and using the IOB tagging as a second source factor;

- A model adding to the word embeddings the fine-grained annotations as a first source factor and using the IOB tagging as a second source factor;

- A model concatenating to the word embeddings the coarse-grained annotations as a first source factor and using the IOB tagging as a second source factor;

- A model adding to the word embeddings the coarse-grained annotations as a first source factor and using the IOB tagging as a second source factor.

Results

The authors carry out several types of evaluation, starting with the de facto one for Machine Translation quality evaluation: computing the automatic evaluation metric BLEU. Their evaluation results show that almost all models perform better. However, improvements are also not impressive: up to a point in the case of the German engine, and just 0.16 points in the case of the Chinese one.

As the authors also duly acknowledge, using an automatic MT evaluation metric to measure the impact of annotating named entities is just an indirect evaluation, as we don’t know if the named entities were translated correctly or not. To that end, they perform an evaluation of the named entities only on 300 random sentences (100 per type of named entity type identified on the English side of the corpus).

They first ran an automatic in-depth analysis of the named entities appearing in their new test set. To do so they annotated the reference translations with spaCy in the case of the German models, and with Standford NER in the case of the Chinese models, and then checked whether the annotated named entities appeared in the translations of each model. Instead of measuring precision and recall or the F1-measure they use a named entity match formula that divides all hits (named entities appearing in both the reference and the MT output) by the sum of all hits and misses (named entities appearing in the reference but not in the source).

Their results show up to 72.39% hits in the case of German (when measuring for all categories), and 27.96% in the case of Chinese. The authors hypothesize that this rather low result in the case of Chinese could be due to transliterations. Interestingly, the engine supposedly getting the named entities right for German was obtaining better BLEU scores than the baseline, but was not the best scoring one. In the case of Chinese, the best engine coincides. What is confirmed is that in almost all cases, augmenting source sentences with named entity information leads to their improved translation. It also seems that IOB tagging is an important piece of information for the NMT systems, as the models including it obtain better overall results. Finally, their results contradict the findings by Li et al. (2018), as in their case inline annotation does not deliver promising results. In fact for the German engine the results are significantly lower, and in the case of Chinese insignificantly higher.

Finally, a human evaluation was additionally performed to validate the results obtained using NER tools without assessing their accuracy. In this case, a human annotator marked as a hit or miss the named entities and counted as valid the named entities whose form in the MT output was different from the one in the reference, but still correct. Interestingly, the results show a higher hit/miss rate due to the fact that the source text contained false positives (strings identified as named entities that in reality are not), and the translations also included false negatives (there was not an exact match, but actually it was a valid translation).

In summary

It seems that using source factors and IOB tagging to annotate named entities and their classes is a promising approach to improve the quality of named entity translations in NMT. The authors also claim that source factors guide the annotated models better and prevent over-translation.

However, before implementing this approach into commercial environments, testing the accuracy of the NER tool to be used is paramount, as it will greatly influence the overall quality of the translations. The same way that clean data is key to training NMT models, good annotations also need to be ensured. If this is not the case, the infamous expression garbage in, garbage out would become true and what started as a promising path towards tackling a difficult challenge may become a case of being lost in translation.