Issue #150 - Automated Question Generation

Overview

An objective of many NLP systems is to help people efficiently understand textual content. This can be seen in applications that try to automatically extract key points that capture what a document is about. One way to represent the ‘key points’ of a document is in the format of question-and-answer pairs. Structuring information in this way can be helpful: consider how often people consult the “Frequently Asked Questions” section of an information source. Now imagine an NLP system that could automatically generate something akin to an FAQ list for any given text. This is the ambition we pursued in our paper “AnswerQuest: A System for Generating Question-Answer Items from Multi-Paragraph Documents” (Roemmele et al., 2021), which I’ll describe in this article.

Developing this capability required us to delve into an intriguing area of NLP research: question generation (QG). QG systems strive to simulate people’s ability to ask questions that elicit meaningful information, like the questions you’d find in an FAQ section. It may seem like QG should be a relatively easy task, since a question is just a short text that requests information. But in reality, QG shares many of the challenges associated with other language generation tasks like dialogue production, summarization, and machine translation. This is because QG models need a fair amount of knowledge in the first place in order to ask meaningful questions. I’ll illustrate this by discussing our own experiments with QG.

Setting a Standard

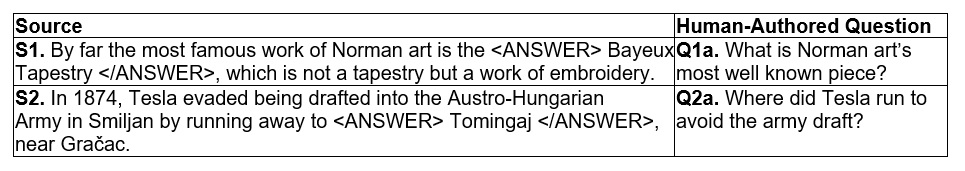

For our first attempt at QG, we trained a neural Transformer sequence-to-sequence model to generate questions. We refer to this model as StandardQG. This is actually the same type of model frequently used for machine translation and other tasks involving aligned source-target text pairs. In this case, instead of observing the translation of a text from a source language into a target language, StandardQG observed a text passage and a question as the source and target, respectively. We used passage-question pairs from the Stanford Question Answering Dataset (SQuAD) released by Rajpurkar et al. (2016). This dataset consists of human-authored questions about Wikipedia articles, where the answers to the questions are annotated within the article. In particular, the training pairs looked like the following examples.

Trained on these pairs, StandardQG learned to generate the target questions that were answered by the tokens inside the tags in the source text. When this model is deployed to generate questions for any given text, these answer annotations can be inserted around any phrase in order to cue the model to produce a question relevant to that phrase.
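To make the answer-annotation idea concrete, here is a minimal sketch of how a source input could be prepared at generation time. The marker strings `<ANSWER>` and `</ANSWER>`, the function name `mark_answer`, and the example sentence are all assumptions made for illustration; the article does not state the actual tag tokens used by the system.

```python
# Hypothetical marker tokens: the actual tag strings used by the system
# are not given in this article.
ANSWER_OPEN = "<ANSWER>"
ANSWER_CLOSE = "</ANSWER>"


def mark_answer(passage: str, answer: str) -> str:
    """Wrap the first occurrence of `answer` in `passage` with marker tokens.

    The marked passage is what a QG model of this kind would receive as its
    source text, cueing it to ask a question about the marked phrase.
    """
    start = passage.find(answer)
    if start == -1:
        raise ValueError("answer phrase not found in passage")
    end = start + len(answer)
    return (
        f"{passage[:start]}{ANSWER_OPEN} {answer} {ANSWER_CLOSE}{passage[end:]}"
    )


# Illustrative sentence, not taken from the dataset.
source = mark_answer("Tesla ran away to Tomingaj.", "Tomingaj")
print(source)
```

Because the markers can be placed around any phrase, the same passage can yield a different source input (and so a different question) for each candidate answer.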

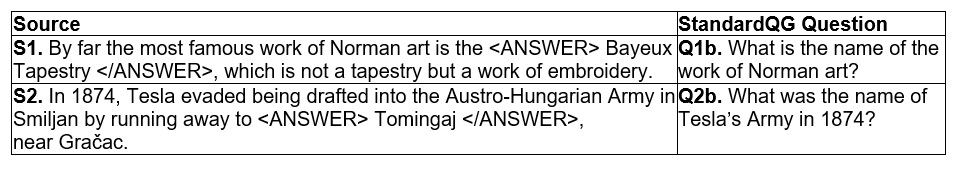

While StandardQG could produce fluent questions in many cases, there were two general problems, revealed by the examples below.

In example Q1b, the question is vague, since presumably there are many works of Norman art and it’s not clear without looking at the source which one the question refers to. In example Q2b, the question is misleading since it incorrectly implies Tesla had his own army, and thus there is no valid answer. These instances likely result from the model over-applying generic lexical patterns that appear across many questions in the dataset (e.g. “What is the name of X?”), rather than sufficiently attuning to the context of the source text. This lack of context sensitivity can particularly lead to the factual invalidity shown in Q2b. Neural models are prone to this problem across many different language generation tasks.

Simulating Linguistic Rules

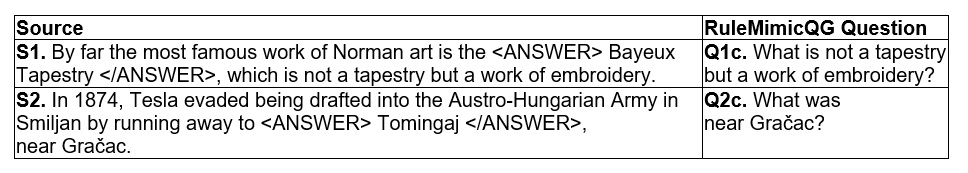

Interestingly, many of the generation models used more commonly 10-20 years ago are less vulnerable to the factuality problem. This is because these models make use of deterministic rule-based templates to map a source text to its target, instead of statistically inferring this mapping. For QG, this rule-based approach involves lexical and syntactic transformations like re-ordering and inserting tokens into a sentence with an ablated phrase in order to convert it into a question answered by that phrase. For instance, the question “Where did Tesla run away to?” can be derived from S2 in the above examples through operations like replacing the phrase “Tomingaj” with the question word “where”, inserting the auxiliary verb “did”, and reversing syntactic order so that the subject “Tesla” follows the verb. Consequently, these models more often preserve the text in the source. We wanted to find a way to encourage our own model to do this better. To explore this, we used the rule-based system by Heilman & Smith (2010) to derive a large set of questions from the SQuAD source passages. We then trained a new neural Transformer sequence-to-sequence model, which we term RuleMimicQG, on these rule-derived questions. We intuitively expected the model to learn to mimic the rule-based transformations by observing their input and output. RuleMimicQG ultimately produced questions like the following examples.
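As a rough illustration of the kind of transformation described above, the following toy sketch derives a “where” question from a simple sentence. It is not the Heilman & Smith (2010) system, which relies on full syntactic analysis. The function `where_question`, the tiny `PAST_TO_BASE` lookup table, and the simplified example sentence are assumptions made for this sketch, and it only handles sentences of the form “subject, past-tense verb, rest”.

```python
# Toy lookup from past-tense verbs to base forms (hypothetical, tiny on purpose).
PAST_TO_BASE = {"ran": "run", "went": "go", "moved": "move"}


def where_question(sentence: str, answer: str) -> str:
    """Derive a 'where' question answered by `answer` from a simple sentence.

    Assumes the sentence starts with a one-word subject followed by a
    past-tense verb, which is far narrower than a real rule-based system.
    """
    body = sentence.rstrip(".")
    if answer not in body:
        raise ValueError("answer phrase must appear in the sentence")
    # Step 1: ablate the answer phrase from the sentence.
    body = " ".join(body.replace(answer, "", 1).split())
    subject, verb, *rest = body.split()
    # Step 2: insert the auxiliary "did" and put the main verb in base form.
    base = PAST_TO_BASE.get(verb, verb)
    # Step 3: question word first, then auxiliary, then subject and the rest.
    return " ".join(["Where", "did", subject, base, *rest]) + "?"


print(where_question("Tesla ran away to Tomingaj.", "Tomingaj"))
```

Every content word in the output comes from the input sentence, which is why rule-derived questions tend to stay faithful to the source.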

Compared with the StandardQG questions, these questions seem more anchored to the source text, and they avoid inserting out-of-place semantic words. The drawback is that the RuleMimicQG questions often lack substance compared to human-authored questions. They suggest the model is merely shallowly extracting information without judging its overall relevance to the source text. Example Q1c, for instance, does not establish the relevance of mentioning that the work is not a tapestry. Example Q2c asks about the relation between Gračac and Tomingaj but the significance of this relation (that Tesla traveled to this area to evade the draft) is not clear.

Simulating Humanness

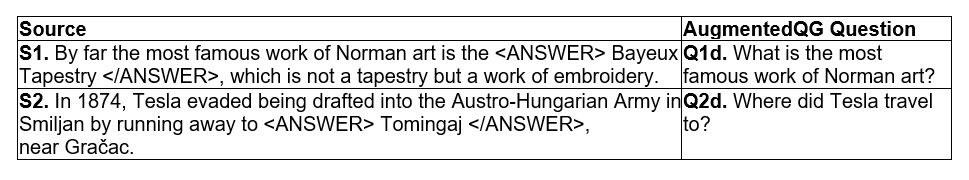

We wanted our model to capture the specificity and faithfulness of the RuleMimicQG questions while also producing questions that people find meaningful. Thus, we took RuleMimicQG and additionally trained it on the human-authored questions observed by StandardQG. We hoped that having RuleMimicQG as an anchor would help keep the model from generating overly generic questions, as we observed initially with StandardQG. We refer to this resulting model as AugmentedQG. Below are some questions generated by AugmentedQG.

These examples appear more attuned to their source passages than the StandardQG questions and also more substantial than the RuleMimicQG questions. However, there are still traces of problems in the AugmentedQG questions. In particular, example Q2d should ideally include more of the context provided by the source text in order to clarify the relevance of Tesla’s travel. The human-authored question for the same source (Q2a) does give this context: “Where did Tesla run to avoid the army draft?”

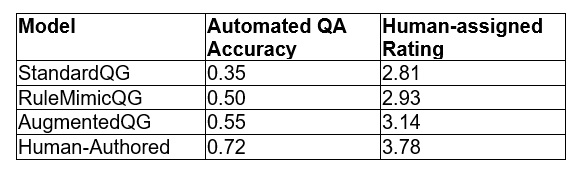

Still, the advantage of AugmentedQG over the other models was confirmed by two quantitative evaluations. In the first, we gave the questions generated by each of our models, along with the passages containing their answers, to an automated question answering (QA) system. The intuition behind this evaluation is that the clearer a question is, the more likely it is that a QA system can correctly find the answer to the question in the provided passage. In the second evaluation, we had people rate (on a 1-5 scale) the comprehensibility of questions generated by each model. The table below shows the results of both of these evaluations, with the scores of the human-authored questions included in the comparison.

As shown, 55% of the questions generated by AugmentedQG were answered correctly by the automated QA system, compared with 50% of the RuleMimicQG questions and 35% of the StandardQG questions. The AugmentedQG questions were still less clear than the questions written by humans, 72% of which were answered correctly by the QA system. Similarly, the AugmentedQG questions were rated as more comprehensible (an average rating of 3.14) than the RuleMimicQG questions (2.93) and the StandardQG questions (2.81). This rating was still much lower than that of the human-authored questions (3.78).
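The QA-based score reported above can be pictured as the fraction of generated questions for which a QA system recovers the annotated answer. The sketch below is one plausible way to compute such a score, not the evaluation code from the paper: the `qa_system` callable, the normalized exact-match criterion, and the helper names are all assumptions.

```python
import string
from typing import Callable, Sequence, Tuple


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def answerability(
    items: Sequence[Tuple[str, str, str]],
    qa_system: Callable[[str, str], str],
) -> float:
    """Fraction of (passage, question, gold_answer) items answered correctly.

    `qa_system` is a hypothetical callable mapping (passage, question) to a
    predicted answer string. Correctness here means normalized exact match,
    which is an assumption rather than the paper's stated criterion.
    """
    if not items:
        raise ValueError("need at least one item to score")
    correct = sum(
        normalize(qa_system(passage, question)) == normalize(gold)
        for passage, question, gold in items
    )
    return correct / len(items)


# Stand-in QA system for demonstration: it only "answers" where-questions.
def stub_qa(passage: str, question: str) -> str:
    return "Tomingaj" if question.startswith("Where") else ""


items = [
    ("Tesla ran away to Tomingaj.", "Where did Tesla run away to?", "Tomingaj"),
    ("Tesla ran away to Tomingaj.", "What is the name of the place?", "Tomingaj"),
]
print(answerability(items, stub_qa))
```

Under this framing, a vague or misleading question lowers the score because the QA system has less to go on when locating the answer span.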

In Summary

Overall, these results indicate that when training a neural QG model on human-authored examples, it is beneficial to have the model first observe artificially generated examples that precisely simulate linguistic rules. The StandardQG outcome suggests these rules are harder for a model to infer directly from a fixed set of human-authored questions. However, observing the rules alone doesn’t tell a model what knowledge people emphasize when asking questions, as the RuleMimicQG results show. A model is most successful when it learns from both rules and human behavior, as done by AugmentedQG. This finding could possibly generalize to neural models for other generation tasks. Accordingly, the field of NLP is currently exploring how to fuse traditional formal approaches with modern neural ones in order to achieve the best of both worlds.

The beginning of this article explained how we have integrated QG in an application to convey important content in a document. There are other exciting use cases of QG, such as an interactive writing aid that asks authors questions to clarify or elaborate on what they have already written. An application like this would especially push QG models to anticipate what information people find important and meaningful to discover in a text, which currently remains a challenge for this research.
