Issue #163 - Evaluating Terminology Consistency for Machine Translation

Introduction

Despite the high quality that neural machine translation (NMT) reaches nowadays, its output is often still not adequate for domain-specific scenarios. High-quality domain-specific NMT systems are in great demand, and one of the challenges in building them is getting the terminology right. We have multiple automatic metrics for assessing the general quality of MT output, but they don’t explicitly address terminology constraints. In today’s blog post, we take a look at the work of Alam et al. (2021), who propose metrics to evaluate the consistency of MT output with regard to terminology.

Proposed Metrics

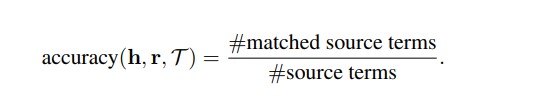

- Exact-Match Accuracy: A simple metric that checks, for each annotated source term, whether its terminology-defined translation appears in the output, and reports the aggregate accuracy over all terms.

This metric has a clear limitation: it doesn’t take the position of the target-side term into account, so it can produce a very high score even when the source-term translations are simply appended to the beginning or end of the output translation.

- Window Overlap: This approach addresses the limitation of Exact-Match Accuracy. For each exact-matched target term, it takes a window of n content words (excluding punctuation and stopwords) on the left and right sides of the term, in both the hypothesis and the reference, and computes the percentage of tokens shared by the two windows.

- Terminology-biased TER: TER (Snover et al., 2006) is an automatic metric based on edit distance. It measures how much a human would have to edit a machine translation output to make it identical to a given reference translation. The authors propose a modified version of TER, which they call TERm, that penalizes edits on terminology tokens more heavily than edits on other tokens: the edit cost C_term is set to 2, whereas every edit costs 1 in the original TER.
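To make the three metric definitions above more concrete, here is a minimal Python sketch. It is not the authors’ implementation, and every function name in it is illustrative. It assumes naive lowercase regex tokenization, a tiny hypothetical stopword list, a window of n = 2 content words, and that window overlap is normalized by the size of the reference window; the paper may make different choices. The TERm part is a further simplification: a term-weighted edit distance *without* TER’s shift operation, where any edit touching a term token costs C_term = 2 and every other edit costs 1.

```python
import re
from typing import List, Sequence

# Hypothetical, deliberately tiny stopword list (English + French); a real
# evaluation would use a proper list for the target language.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "is",
             "le", "la", "les", "de", "du", "des", "un", "une", "et"}


def tokenize(text: str) -> List[str]:
    # Assumption: lowercase word tokens; punctuation is dropped by the regex.
    return re.findall(r"\w+", text.lower())


def find_term(tokens: Sequence[str], term: Sequence[str]) -> int:
    """Start index of the first occurrence of `term` in `tokens`, or -1."""
    n = len(term)
    for i in range(len(tokens) - n + 1):
        if list(tokens[i:i + n]) == list(term):
            return i
    return -1


def exact_match_accuracy(hyps: List[str], term_lists: List[List[str]]) -> float:
    """Share of annotated target terms that appear verbatim in the hypothesis."""
    total = hits = 0
    for hyp, terms in zip(hyps, term_lists):
        hyp_toks = tokenize(hyp)
        for term in terms:
            total += 1
            if find_term(hyp_toks, tokenize(term)) >= 0:
                hits += 1
    return hits / total if total else 0.0


def content_window(tokens: List[str], start: int, length: int, n: int) -> List[str]:
    """Up to n non-stopword tokens on each side of the term span."""
    left = [t for t in tokens[:start] if t not in STOPWORDS]
    right = [t for t in tokens[start + length:] if t not in STOPWORDS]
    return left[max(len(left) - n, 0):] + right[:n]


def window_overlap(hyps: List[str], refs: List[str],
                   term_lists: List[List[str]], n: int = 2) -> float:
    """Mean % of reference-window tokens also found in the hypothesis window,
    over terms exact-matched in both hypothesis and reference (assumed normalization)."""
    scores = []
    for hyp, ref, terms in zip(hyps, refs, term_lists):
        hyp_toks, ref_toks = tokenize(hyp), tokenize(ref)
        for term in terms:
            term_toks = tokenize(term)
            h, r = find_term(hyp_toks, term_toks), find_term(ref_toks, term_toks)
            if h < 0 or r < 0:
                continue  # only exact-matched terms are scored
            hyp_win = set(content_window(hyp_toks, h, len(term_toks), n))
            ref_win = set(content_window(ref_toks, r, len(term_toks), n))
            if ref_win:
                scores.append(100.0 * len(hyp_win & ref_win) / len(ref_win))
    return sum(scores) / len(scores) if scores else 0.0


def term_weighted_edit_rate(hyp: str, ref: str, terms: List[str],
                            c_term: float = 2.0) -> float:
    """TER-like rate WITHOUT shifts: an edit touching a term token costs c_term,
    any other edit costs 1; the total is divided by the reference length."""
    h, r = tokenize(hyp), tokenize(ref)
    term_toks = {t for term in terms for t in tokenize(term)}

    def cost(tok: str) -> float:
        return c_term if tok in term_toks else 1.0

    # dp[i][j]: cheapest way to turn h[:i] into r[:j]
    dp = [[0.0] * (len(r) + 1) for _ in range(len(h) + 1)]
    for i in range(1, len(h) + 1):
        dp[i][0] = dp[i - 1][0] + cost(h[i - 1])
    for j in range(1, len(r) + 1):
        dp[0][j] = dp[0][j - 1] + cost(r[j - 1])
    for i in range(1, len(h) + 1):
        for j in range(1, len(r) + 1):
            sub = 0.0 if h[i - 1] == r[j - 1] else max(cost(h[i - 1]), cost(r[j - 1]))
            dp[i][j] = min(dp[i - 1][j] + cost(h[i - 1]),  # delete hyp token
                           dp[i][j - 1] + cost(r[j - 1]),  # insert ref token
                           dp[i - 1][j - 1] + sub)         # match / substitute
    return dp[len(h)][len(r)] / max(len(r), 1)


if __name__ == "__main__":
    ref = "Les patients atteints du coronavirus présentent une fièvre élevée."
    good = "Les patients atteints du coronavirus ont une fièvre élevée."
    cheated = "Les patients malades présentent une fièvre élevée. coronavirus"
    terms = ["coronavirus"]
    print(exact_match_accuracy([good], [terms]), exact_match_accuracy([cheated], [terms]))  # 1.0 1.0
    print(window_overlap([good], [ref], [terms]), window_overlap([cheated], [ref], [terms]))  # 75.0 25.0
    print(round(term_weighted_edit_rate(good, ref, terms), 3),
          round(term_weighted_edit_rate(cheated, ref, terms), 3))  # 0.111 0.667
```

In this toy demo, both hypotheses get an exact-match accuracy of 1.0, but the one with the term appended at the end drops from 75% to 25% window overlap, and its term-weighted edit rate rises from about 0.11 to about 0.67 (it would be about 0.44 with C_term = 1). These numbers only illustrate the mechanics; they are not results from the paper.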

Experiments and Results

Test Suite:

The TICO-19 test suite (Anastasopoulos et al., 2020) contains 2,100 test sentences from the COVID-19 domain. The test suite is annotated with terms, and the terminology matches were verified by professional translators.

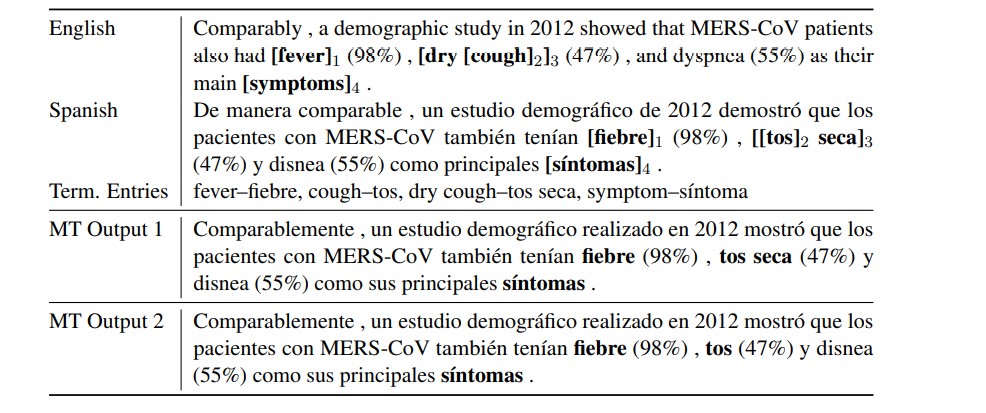

An example for term annotation on the dataset can be seen in the table below:

Table 1: Term annotation on the dataset for both source and target

NMT Models:

The OPUS-MT systems (Tiedemann and Thottingal, 2020), trained on the OPUS parallel corpora with the Marian toolkit (Junczys-Dowmunt et al., 2018), are used for evaluating the English<->Russian and English<->French language directions.

Pre-trained fairseq models are used for evaluating the English->Russian (Ng et al., 2019) and English->French (Ott et al., 2018) translation tasks.

The NAVER many-to-one multilingual NMT model (Bérard et al., 2020) is used for evaluating the French->English translation task.

For each system, the authors also create “cheated” translations, in which the target terms that the system failed to produce are appended to the end of its output.
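Building such a “cheated” output is simple enough to sketch in a few lines of Python. The helper names, the regex tokenization and the French example are assumptions for illustration, not the authors’ code. The sketch shows why the trick matters: appending the missing terms lifts exact-match term coverage to 100% without changing the translation itself.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    # Assumption: lowercase word tokens, punctuation dropped.
    return re.findall(r"\w+", text.lower())


def contains_term(hyp: str, term: str) -> bool:
    """True if the term's tokens occur contiguously in the hypothesis."""
    h, t = tokenize(hyp), tokenize(term)
    return any(h[i:i + len(t)] == t for i in range(len(h) - len(t) + 1))


def term_coverage(hyp: str, target_terms: List[str]) -> float:
    """Fraction of target terms present in the hypothesis (exact match)."""
    if not target_terms:
        return 0.0
    return sum(contains_term(hyp, t) for t in target_terms) / len(target_terms)


def make_cheated(hyp: str, target_terms: List[str]) -> str:
    """Hypothetical helper: append every target term missing from `hyp` to its end."""
    missing = [t for t in target_terms if not contains_term(hyp, t)]
    return " ".join([hyp] + missing)


if __name__ == "__main__":
    hyp = "Les patients malades présentent une forte fièvre."
    terms = ["coronavirus", "fièvre"]
    cheated = make_cheated(hyp, terms)
    print(cheated)  # Les patients malades présentent une forte fièvre. coronavirus
    print(term_coverage(hyp, terms), term_coverage(cheated, terms))  # 0.5 1.0
```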

Evaluation:

Automatic Evaluation - All of the NMT systems are evaluated using BLEU (Papineni et al., 2002) and the three terminology-specific evaluation metrics proposed in the paper.

Human-based annotation - The authors also conduct a small-scale human-annotation study on English-French and English-Russian MT outputs. The annotation is done by experienced bilingual speakers.

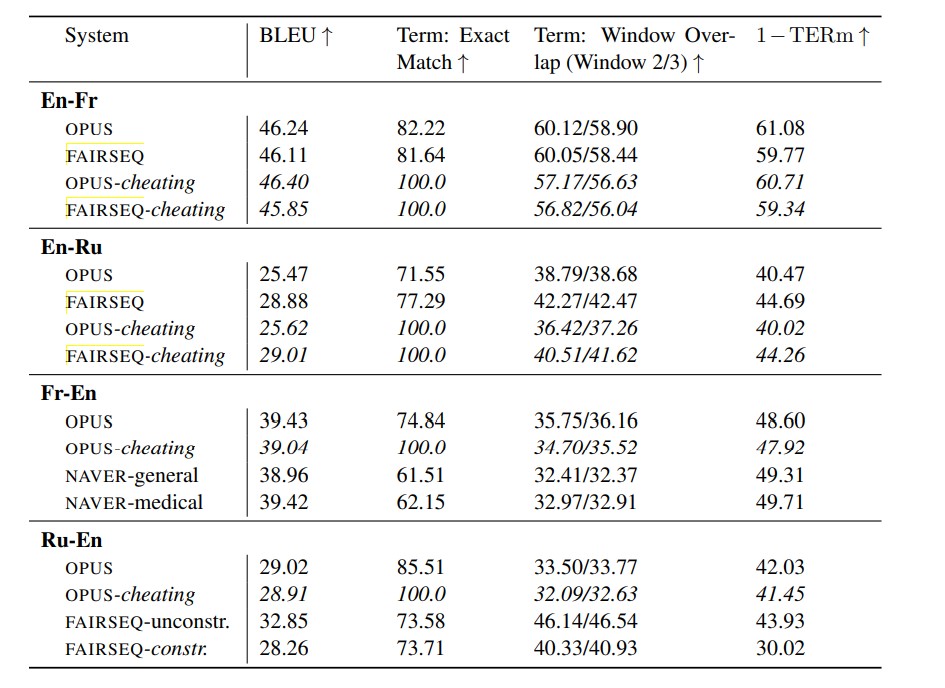

Table 2: Evaluation results as reported in the paper

Findings:

- The results in Table 2 show that BLEU does not significantly penalize the “cheating approach”. TERm, on the other hand, penalizes it more strongly because of the higher penalty associated with shift operations.

- TERm is more likely than BLEU to significantly penalize “cheated” systems. However, the small-scale human annotation experiment suggests that neither BLEU nor TERm alone is sufficient to draw conclusions.

- The window overlap metric does well at penalizing the “cheating approach”. A combination of BLEU, exact-match accuracy and window overlap can be used to adequately evaluate how well NMT systems satisfy terminology constraints.

- The proposed metrics provide a holistic view that helps in distinguishing the translation systems based on both general and terminology-focused quality.

In summary

Alam et al. (2021) propose three metrics to evaluate terminology consistency in MT output: Exact-Match Accuracy, Window Overlap and Terminology-biased TER. Based on experiments on different datasets across various languages and a limited human annotation study, they show that a combination of these metrics can explicitly identify the MT systems that perform better at terminology translation.