Issue #107 - When and Why is Unsupervised Neural Machine Translation Useless?

Introduction

Neural Machine Translation (MT) has engendered a great impulse in the machine translation industry by making MT useful in many use cases in which it wasn’t previously. However, in many low-resourced language pairs and domains, MT is still not viable due to a lack of parallel data. In this context, unsupervised neural MT, which requires only monolingual data, appears as the next revolution to push the frontiers of MT to a multitude of new applications. In this post we take a look at the paper by Kim et al. (2020), which studies the practical usefulness of unsupervised neural MT thus far and gives hints of research directions.

Approach

Kim et al. remark that in the literature, unsupervised neural MT is evaluated on high-resourced language pairs, such as French-English or German-English. In these language pairs, since large parallel corpora are available, unsupervised learning is not needed in practice. Unsupervised MT is needed when no parallel data are available in a specific language pair and domain. The aim of the paper is to study the performance of unsupervised MT in different scenarios with regard to the amount of parallel and monolingual data available and to the linguistic proximity of the source and target languages. They explore ten tasks in the following language pairs:

- German↔English: similar languages, abundant bilingual/monolingual data

- Russian↔English: distant languages, abundant bilingual/monolingual data, similar sizes of the alphabet

- Chinese↔English: distant languages, abundant bilingual/monolingual data, very different sizes of the alphabet

- Kazakh↔English: distant languages, scarce bilingual data, abundant monolingual data

- Gujarati↔English: distant languages, scarce bilingual/monolingual data

Comparison with Supervised Neural MT

Kim et al. first compare the performance of unsupervised neural MT to that of supervised neural MT (using parallel data) and “semi-supervised” neural MT (using parallel data and a synthetic parallel corpus obtained from back-translation, i.e. translating monolingual target data into the source language). Back-translation yields a 5 to 20% improvement in most language pairs. Unsupervised neural MT achieves readable translations only in two high-resourced language pairs (German↔English and Russian↔English), but their BLEU score is only half of the semi-supervised one. In Chinese↔English, Kazakh↔English and Gujarati↔English, unsupervised neural MT doesn’t achieve any meaningful model (BLEU score lies between 0.6 and 3).

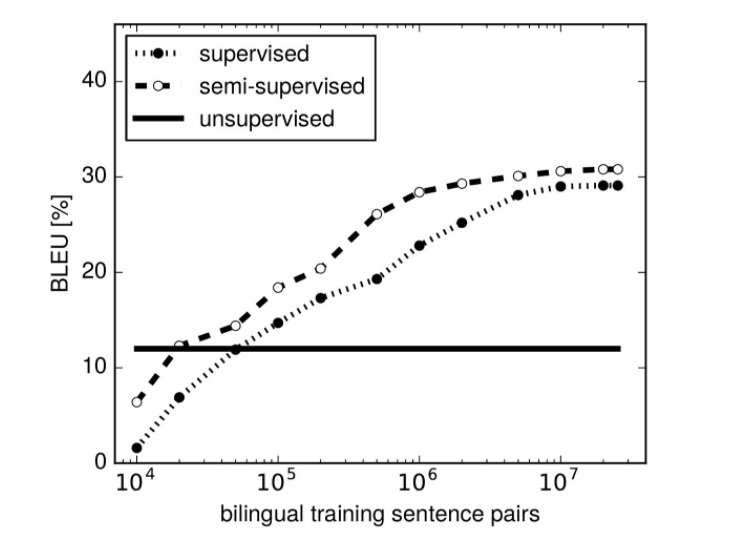

In another interesting experiment, the authors plot the BLEU score versus the size of the parallel training corpus in supervised and semi-supervised (with fixed synthetic parallel corpus) neural MT. The figure below is for Russian into English. We can see that in this language pair and data (WMT 2018), the BLEU score starts to plateau with a corpus size of about 5 million sentence pairs. This is an interesting curve to see what to expect in terms of BLEU score for supervised neural MT with a certain amount of parallel data. This curve also shows that in this task, with as little as 50,000 parallel segments, supervised neural MT outperforms unsupervised MT. For German-English, as little as 100,000 parallel segments are enough to outperform unsupervised MT.

Unsupervised Neural MT Parameters

The paper studies the best values for some parameters of the unsupervised neural MT model.

For German-English and Russian-English, it is observed that training with 1 million monolingual sentences already gives a reasonable performance, and results start to saturate with 5 million sentences. Also, having more data in a language even if fewer data are available in the other language doesn’t help. Thus the amount of monolingual data should be balanced between the source and target languages.

Another finding of the paper is that it is critical that the domain of source and target data match for unsupervised neural MT. For example, the performance of German↔English deteriorates down to -11.8% BLEU if the domains mismatch.

Finally, the results show the importance of the initialisation over translation training in current unsupervised neural MT. It is critical to have a good cross-lingual language model to initialise the model, as the current translation training won’t recover from a bad initialisation. A bad cross-lingual language model yields a poor cross-linguality in the encoder, which causes errors of copying the input into the output. Thus pertinent research directions are building language models which are able to handle dissimilar languages and domains equally well, and training methods to bootstrap out of a poor initialisation.