Issue #108 - Terminology-Constrained Neural Machine Translation at SAP

Introduction

Nowadays, Neural Machine Translation (NMT) has achieved impressive progress for most of the common language pairs, when enough training materials are available. However, the output is still not as promising for many cases of specific domains that are handled daily by the translation industry. How to enable NMT to properly translate terminology has always been a challenge in production scenarios outside of a research setting. We previously discussed NMT terminology constraints in some earlier blog posts (#7, #79, #82). In this post, we will review a paper by Exel et al. (2020) that examines variations of the approach by Dinu et al. (2019) in an industrial setup. Their experiments studied how far this approach can help to overcome the terminology issue in NMT. The paper also provides an in-depth evaluation and analysis based on their outcomes.

Incorporating Terminology Constraints

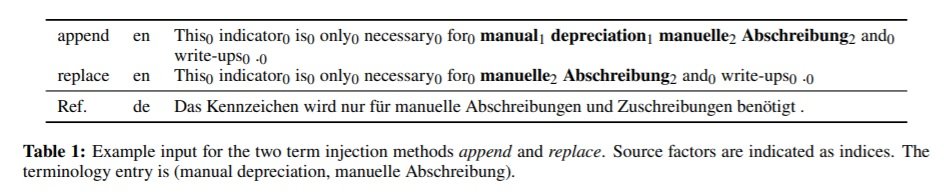

The main strategy applied in this paper is based on the approach by Dinu et al. (2019), in which the NMT model is trained to learn how to use terminology entries when they are provided as additional input to the source segments. In particular, terminology information is integrated as inline annotations in the source sentence, by either appending the target term to its source version (append), or by directly replacing the original term with the target one (replace). There are three possible values for the annotation: 0 for source words (default), 1 for source terms, and 2 for target terms. Table 1 shows an input example with annotations: The annotation source factors are represented by embedding vectors (experiment embedding sizes: 8 and 16) and these factors can be combined with respective word embedding by either concatenating or summing.

The annotation source factors are represented by embedding vectors (experiment embedding sizes: 8 and 16) and these factors can be combined with respective word embedding by either concatenating or summing.

To make the approach not only enforce the exact terminology but also learn its contextually appropriate inflections, a fuzzy matching strategy was used to find and annotate the terms in the data. Specifically, they lemmatised on the source side, and differences of two characters on the target side were allowed. In case of multiple overlapping matches, only the longest match was kept.

Experiments

The experiments were conducted with a transformer network on two language pairs: English-German (en-de) and English-Russian (en-ru). The training, validation and test sets are 5 million, 2 thousand and 3 thousand parallel segments respectively. Two test sets were used for the evaluation, where one contains at least one terminology entry per sentence, whereas the other does not have any terminology annotated. Furthermore, the authors applied Sockeye’s implementation of constrained decoding to a baseline without injected terms and source factors to compare its performance with their experiment results.Results

For both language pairs, the terminology-constrained models perform better than the baselines in terms of term rate and translation quality: +4.34 BLEU scores for en-de and +11.23 for en-ru. Note that the big difference in the results can be explained by the test sets that differ in sentence length and grammatical complexity. According to the human evaluation, the best-performing models can reach a term accuracy rate of 86% as excellent translation for en-de and 80% for en-ru.Observations

- In general, the append method works better than the replace method.

- Looking only at the append method results, concatenation of the two embedding vectors (subword embedding + source factor embedding) works better than summarisation.

- The source factor embedding is not very useful in both cases, the model can handle quite well the copy behavior of the injected terms to the output without requiring additional input signals.

- Compared to constrained decoding, the overall translation quality of this terminology-constrained strategy is better without any negative impact on translation speed.

- Terminologies are integrated smoothly into the context of the target language using correct morphological forms.

- In the case of German, single terms can build natural compound words, nominal terminologies are enforced to have less over-compoundings.

- Around 4% of terminology terms failed to be matched. According to the human assessment, around 45% of these unmatched terms are mostly either terms in an inflection form or terms in a compound word that escape the fuzzy matching rules.