Issue #112 -Translating markup tags in Neural Machine Translation

Introduction

Text to be translated is often encapsulated in structured documents containing inline tags in different formats, such as XML, HTML, Microsoft Word, PDF, XLIFF, etc. Transferring these inline tags into the target language is not a trivial task. However, it is a crucial component of the MT system, because a correct tag placement ensures a good readability of raw MT, and saves effort if post-editing is performed. Today, we take a look at a paper by Hanneman and Dinu (2020) comparing different tag placement approaches. They show that letting the neural MT model learn tag placement may be better than the commonly used detag-and-project approach.

The detag-and-project approach is the baseline and consists of removing the tags before translation, and projecting them after translation using word alignment. For example, if a tag encloses a source word w1, this tag should enclose the translation of w1 in the target language. Thus it should enclose the target word aligned to w1. If a tag encloses a phrase w1, …, wN, this tag should be placed between the first and last positions of the words aligned to the source phrase words.

Data Augmentation via Tag Injection

In Hanneman and Dinu’s approach, the tag placement is learned by the neural MT model. Since the vast majority of available training data is in plain text format, they augment the training data with tags. This is performed by tag injection. Tags are injected around corresponding source and target sentence fragments found in the training corpus. Corresponding fragments are identified using the hypothesis that, if the out-of-context translation of a sentence fragment is found in the target sentence, then those text fragments are aligned. For example, if an MT engine translates b into y, then b and y are corresponding fragments and we can inject the following tag structure:a <t>b</t> c

x <t>y</t> z

To account for not well-formed tagged text, there are also fixed probabilities to not produce the opening or closing tag. A fixed probability that the tag will be injected as self-closing rather than a pair, i.e. as “a <t/>b c” or “a b<t/> c”, is also introduced. Finally, the injection of multiple tags into the same parallel segment is allowed via a parameter that specifies the maximum number of tag pairs to insert per segment.

In the experiments described in the paper, XLIFF tags are used. Four tags are injected in accordance with the XLIFF 1.2 specification: <g>...</g> for a paired tag, <x/> for a self- closing tag, <bx/> for a tag that opens without closing, and <ex/> for a tag that closes without opening. The tag injection is performed in data sets of three language pairs (en-fr, en-de and en-hu) and evaluated by human judges. The results of the evaluation show that tags are correctly placed in 93.1% of cases in en-de, 90.2% in en-fr, and 86.3% in en-hu. The rates of actually wrongly placed tags are 3.5%, 5.8%, and 6.1%, respectively, with the remaining tags being judged as impossible to place or as unclear.

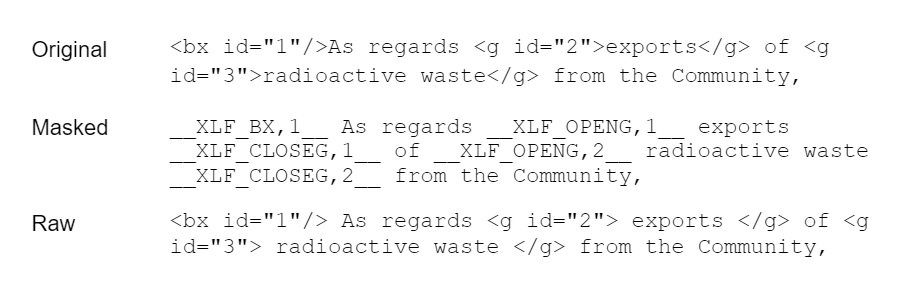

Masked and raw representation

Tags are either replaced by generic placeholder tokens (masked representation) or left as-is (raw representation). An example of each representation is given below. In the masked representation, a different placeholder name is given to each of the five XLIFF tag names present in the data. A sequence number is also included in the mask token, so that the original content can be matched with the correct placeholder in the target side. These sequence numbers start with 1 in each sentence pair and are incremented individually for each of the placeholder names, from left to right in the source sentence. To ensure that the placeholders and raw tags are translated correctly, self-translating examples are added to the training data.

In the masked representation, a different placeholder name is given to each of the five XLIFF tag names present in the data. A sequence number is also included in the mask token, so that the original content can be matched with the correct placeholder in the target side. These sequence numbers start with 1 in each sentence pair and are incremented individually for each of the placeholder names, from left to right in the source sentence. To ensure that the placeholders and raw tags are translated correctly, self-translating examples are added to the training data.

Results

A number of extrinsic and intrinsic evaluations of the different approaches are presented in the paper, including a human evaluation of the tag placement on subsets of each test set used, all extracted from EUR-Lex, the European Union’s online repository of legal documents. For the human evaluation, the authors selected the best variant of each approach according to the evaluation with automated metrics, that is the detag-and-project baseline, the masked augmentation on 15% of the training corpus, and the raw augmentation on either 1% (en–de, en–fr) or 10% (en–hu) of the training corpus. Separate human judgements are reported for tags with indexes 1 or 2 (more frequent) and tags with indexes greater than 2 (less frequent, and thus with less training examples).

For the low-index tags present in the augmented training data, in all language pairs, the raw approach increases the percentage of well placed tags (by 1.1 to 3%) with respect to the detag-and-project baseline, and the masked system outperforms the raw one in all language pairs (by 0.2 to 0.9%). Thus the placement of low-index tags is learned quite well. For high-index tags that appear in training only as self-translated examples, the results are not so clear. The detag-and-project method works best in en–de and en–hu. The masking approach is ranked second in all language pairs. The raw tag method does well in en–de and en–fr but is unusable in en–hu.