Issue #135 - Recovering Low-Frequency Words in Non-Autoregressive NMT

Introduction

Non-Autoregressive Translation (NAT), in which the target words are generated independently, is raising a lot of interest because of its efficiency. However, the assumption that target words are independent of each other leads to errors which affect translation quality. In this post we take a look at a paper by Ding et al. (2021) which confirms findings that low-frequency words are the most affected, and proposes a training method to boost the translation of such words.

Knowledge Distillation

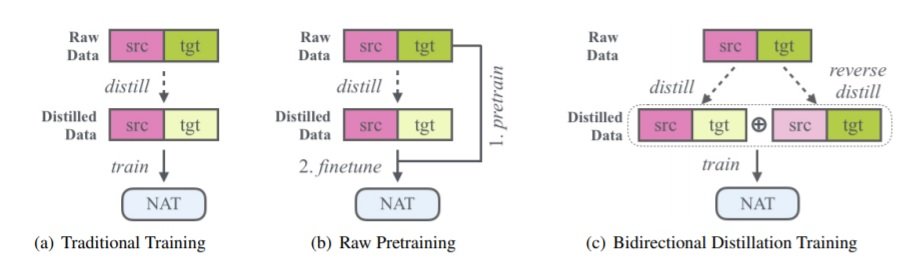

NAT models generate the target words in parallel instead of one after the other. As a consequence, they cannot capture the dependencies between target words. They have trouble translating a source phrase that has several possible translations, because in this case the rest of the translation depends on the first words. A way to mitigate this problem is Knowledge Distillation (KD): a so-called teacher model generates data, which is then used to train a so-called student model. Usually the teacher is a standard autoregressive neural machine translation (AT) model, which is used to translate the source side of the original training data. The student model is then trained on the corpus made of the original source and the translated target (the s→t distilled corpus, see Figure (a)).

The distilled corpus is a simplified version of the original data in which low-frequency words have been replaced by high-frequency ones. The number of translation options for a source phrase is thus reduced, which makes the data better suited to training NAT models. On the other hand, distillation increases the imbalance between high-frequency and low-frequency words. Important information related to the low-frequency words may thus be lost, causing errors when the model has to predict them. In this paper, Ding et al. propose simple ways to recover this information and improve the translation quality of NAT models by improving the precision of the translation of low-frequency words.
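To make the effect of s→t distillation concrete, here is a minimal sketch on a toy corpus. The names `distill_s2t`, `target_counts` and `toy_teacher` are hypothetical, and the teacher is a lookup table standing in for a trained AT model, so this only illustrates the data flow, not the authors' pipeline.

```python
from collections import Counter
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)


def distill_s2t(corpus: List[Pair], teacher: Callable[[str], str]) -> List[Pair]:
    """Keep every original source; replace its target with the teacher's output."""
    return [(src, teacher(src)) for src, _ in corpus]


def target_counts(corpus: List[Pair]) -> Counter:
    """Count whitespace-separated tokens on the target side."""
    return Counter(tok for _, tgt in corpus for tok in tgt.split())


def toy_teacher(src: str) -> str:
    # Assumption: a lookup table standing in for an AT model that always
    # produces the most frequent translation option.
    return {"danke": "thank you"}[src]


# Toy original corpus: one source phrase with three translation options.
original = [
    ("danke", "thank you"),
    ("danke", "thank you"),
    ("danke", "thanks"),
    ("danke", "cheers"),
]
distilled = distill_s2t(original, toy_teacher)

print(target_counts(original))   # rare options "thanks" and "cheers" are present
print(target_counts(distilled))  # only the teacher's preferred option remains
```

In this toy case the distilled corpus has a single translation option per source phrase, which is what makes it easier for a NAT student, but the rare target words have disappeared from the training data.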

Experiments

The first proposed method is to pretrain the NAT model on the original data, and then fine-tune it on the s→t distilled corpus (Figure (b)). Since the low-frequency words have been seen in the original data, their translation is more accurate. Furthermore, the fine-tuning with the distilled corpus ensures the number of translation options is reduced.
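The pretrain-then-fine-tune idea can be sketched as an ordered list of training stages. In the sketch below, `run_schedule` and `toy_train` are hypothetical names, and "training" is reduced to recording which target tokens the model has been exposed to. A real NAT model would be trained with gradient updates, so this only shows why the order of corpora matters for exposure to rare words.

```python
from typing import Callable, List, Set, Tuple

Pair = Tuple[str, str]          # (source sentence, target sentence)
Stage = Tuple[str, List[Pair]]  # (stage name, training corpus)


def run_schedule(
    stages: List[Stage],
    train: Callable[[Set[str], List[Pair]], Set[str]],
) -> Set[str]:
    """Run the stages in order, passing the model state from one to the next."""
    state: Set[str] = set()
    for _name, corpus in stages:
        state = train(state, corpus)
    return state


def toy_train(seen: Set[str], corpus: List[Pair]) -> Set[str]:
    # Assumption: the "model state" is just the set of target tokens seen so far.
    return seen | {tok for _, tgt in corpus for tok in tgt.split()}


original = [("danke", "thank you"), ("danke", "thanks"), ("danke", "cheers")]
distilled = [("danke", "thank you")] * 3  # toy s->t distilled corpus

# Baseline: train on the distilled corpus only.
baseline = run_schedule([("train on s->t KD", distilled)], toy_train)

# First proposed method: pretrain on original data, then fine-tune on s->t KD.
pretrain_finetune = run_schedule(
    [("pretrain on original", original), ("fine-tune on s->t KD", distilled)],
    toy_train,
)

print(sorted(baseline))
print(sorted(pretrain_finetune))
```

Only the second schedule ever exposes the model to "thanks" and "cheers", while both schedules end on the low-ambiguity distilled data.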

The idea of the second proposed method comes from an experiment in which the source-to-target and target-to-source word alignments of the low-frequency words are manually evaluated for the original and s→t distilled corpora. The conclusion is that source low-frequency words are aligned with a higher recall and precision in the distilled corpus, while target low-frequency words are aligned with a lower recall and precision in the distilled corpus. The authors repeat the experiments with a t→s distilled corpus (translated source, original target). As expected, in the t→s distilled corpus, the target low-frequency words are better aligned than in the original corpus. Thus a way to recover the information lost by s→t distillation is to combine it with t→s distillation. The second method consists of training the NAT models on the concatenation of the s→t and t→s distilled corpora (Figure (c)). This method can be refined by pretraining the model on the original corpus and then fine-tuning it on the concatenated distilled corpora. To make sure the number of translation options is eventually reduced, a further refinement consists of a final fine-tuning stage on the s→t distilled corpus only. The ideas behind the different training schemes are illustrated in the figure above.
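As a rough illustration of how the two distilled corpora differ and how they could be chained into the three-stage scheme described above, here is a small sketch. All function names and both toy teachers are hypothetical lookup tables, not trained models.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)
Translator = Callable[[str], str]


def distill_s2t(corpus: List[Pair], forward: Translator) -> List[Pair]:
    """Original source, teacher-translated target."""
    return [(src, forward(src)) for src, _ in corpus]


def distill_t2s(corpus: List[Pair], backward: Translator) -> List[Pair]:
    """Teacher-translated source, original target."""
    return [(backward(tgt), tgt) for _, tgt in corpus]


def staged_corpora(original: List[Pair], forward: Translator, backward: Translator):
    """Three stages: original, then s->t + t->s, then s->t only."""
    s2t = distill_s2t(original, forward)
    t2s = distill_t2s(original, backward)
    return [
        ("pretrain: original", original),
        ("fine-tune: s->t + t->s", s2t + t2s),
        ("fine-tune: s->t only", s2t),
    ]


# Assumption: both teachers collapse every input to its most frequent translation.
def toy_forward(src: str) -> str:
    return {"danke": "thank you", "vielen dank": "thank you", "danke dir": "thank you"}[src]


def toy_backward(tgt: str) -> str:
    return {"thank you": "danke", "thanks": "danke", "cheers": "danke"}[tgt]


original = [("danke", "thank you"), ("vielen dank", "thanks"), ("danke dir", "cheers")]
s2t = distill_s2t(original, toy_forward)
t2s = distill_t2s(original, toy_backward)
stages = staged_corpora(original, toy_forward, toy_backward)

for name, corpus in stages:
    print(name, len(corpus))
```

In this toy data, `s2t` keeps the rarer source phrases but loses "thanks" and "cheers", while `t2s` keeps those target words but simplifies the source side, which mirrors the alignment observation reported by the authors.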

These methods are tested on four widely used WMT test sets covering four language pairs: English-German (en-de), Romanian-English (ro-en), Chinese-English (zh-en), and Japanese-English (ja-en). They are evaluated with the BLEU score.

Results

Detailed results for each training configuration are reported for the en-de task. Pretraining on the original data followed by fine-tuning on the s→t distilled corpus provides some improvement, both in BLEU score (+0.5 points) and in low-frequency word translation accuracy (ALF, +2%). Combining pretraining with fine-tuning on the concatenation of the s→t and t→s distilled corpora is better than the baseline, but not as good as this first method. However, pretraining followed by fine-tuning on the concatenation of the s→t and t→s distilled corpora, followed by fine-tuning on the s→t distilled corpus only, achieves the best results. This method is named “Low-Frequency Rejuvenation” (LFR). Including the t→s distilled corpus during training thus seems to be useful, but these results suggest that it is important to always finish by fine-tuning on the s→t distilled corpus only, in order to reduce the corpus ambiguity.
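The post does not spell out how ALF is computed, so the sketch below is only a simplified proxy and not the paper's metric: it measures the fraction of low-frequency reference tokens that also appear in the system output. The function name `low_freq_accuracy_proxy`, the `max_count` threshold and the toy data are all assumptions made for illustration.

```python
from collections import Counter
from typing import List


def low_freq_accuracy_proxy(
    refs: List[str],
    hyps: List[str],
    train_targets: List[str],
    max_count: int = 1,
) -> float:
    """Share of low-frequency reference tokens that are matched in the hypothesis.

    A token is "low-frequency" if it occurs at most `max_count` times on the
    target side of the training data (assumed threshold).
    """
    freq = Counter(tok for line in train_targets for tok in line.split())
    hits = total = 0
    for ref, hyp in zip(refs, hyps):
        remaining = Counter(hyp.split())  # each hypothesis token can match once
        for tok in ref.split():
            if freq[tok] <= max_count:
                total += 1
                if remaining[tok] > 0:
                    remaining[tok] -= 1
                    hits += 1
    return hits / total if total else 0.0


train_targets = ["thank you", "thank you", "thank you very much", "cheers"]
refs = ["cheers", "thank you very much"]

hyps_a = ["thank you", "thank you very much"]  # misses the rare word "cheers"
hyps_b = ["cheers", "thank you very much"]     # recovers it

score_a = low_freq_accuracy_proxy(refs, hyps_a, train_targets)
score_b = low_freq_accuracy_proxy(refs, hyps_b, train_targets)
print(score_a, score_b)
```

Here the low-frequency reference tokens are "cheers", "very" and "much", so the first system matches two of three and the second matches all three.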

In the other language pairs, LFR is compared to the baseline, which consists of training on the s→t distilled corpus. LFR yields a BLEU score improvement in the range of 0.6 to 1 points and an ALF improvement of about 3-4%.

The authors also conduct a fine-grained analysis of lexical choice. They conclude that most of the improvement in translation accuracy comes from the low-frequency words. They also observe that LFR generates translations that contain more low-frequency words.