Issue #180 - Detecting Over- and Under-translations with Contrastive Conditioning

Introduction

A translation should accurately convey the information in the source sentence without adding or omitting information. However, identifying additions and omissions without a reference translation can be challenging. In this week’s post, we look at work on detecting this type of error by Vamvas & Sennrich (2022).

Background: omission and addition errors

Two types of coverage errors are particularly common in machine translation (MT). A translation may add content that is not present in the source: an addition error, or over-translation. More commonly, there may be content missing from the translation: an omission error, or under-translation.

For example, suppose an accurate translation of a source sentence is “Please exit the plane”, but the MT system outputs “Exit the plane after landing”. This output adds “after landing” and omits “Please”.

Given an accurate reference translation, additions and omissions can be detected using word alignments and n-gram overlap with the reference. This paper, however, focuses on the harder reference-free setting.
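
To make the reference-based setting concrete, here is a deliberately crude sketch that compares unigram counts between an MT output and a reference. It is only an illustration of the overlap idea, not an alignment-based detector; the whitespace tokenization and punctuation stripping are assumptions.

```python
from collections import Counter
from typing import List, Tuple


def bag_of_words_diff(hypothesis: str, reference: str) -> Tuple[List[str], List[str]]:
    """Crude reference-based coverage check using unigram counts.

    Returns (possible_additions, possible_omissions): words the hypothesis has
    but the reference lacks, and words the reference has but the hypothesis lacks.
    """
    def tokenize(text: str) -> List[str]:
        # Lowercase and strip trailing/leading punctuation from each word.
        words = (w.strip(".,!?").lower() for w in text.split())
        return [w for w in words if w]

    hyp = Counter(tokenize(hypothesis))
    ref = Counter(tokenize(reference))
    additions = sorted((hyp - ref).elements())
    omissions = sorted((ref - hyp).elements())
    return additions, omissions


additions, omissions = bag_of_words_diff("Exit the plane after landing.", "Please exit the plane.")
print(additions)  # ['after', 'landing']
print(omissions)  # ['please']
```

Real systems would use word alignments instead, since paraphrases and reorderings make raw word overlap unreliable.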

Contrastive conditioning for omission and addition errors

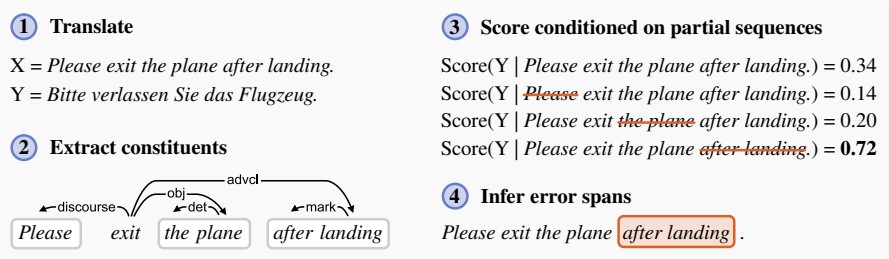

Vamvas & Sennrich (2022) suggest that a translation without over- or under-translation uses “as little information as possible and as much information as necessary.” They test whether a translation has these properties by scoring it with a neural MT model, conditioning either on the full source sentence or on contrastive partial versions of it.

The contrastive sentences are produced by deleting short constituents from the full source. A translation that is more likely given some partial source than given the full source may have an omission error. The score difference suggests the deleted constituent should have been present in the translation.
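
The omission check can be sketched as follows. This is a minimal illustration, not the authors' implementation: `score(target, condition)` is a hypothetical callable returning log P(target | condition), which in the paper would come from an MT model, and `toy_score` is a made-up stand-in that pretends source and target share a vocabulary. The strict "greater than" rule is also a simplification.

```python
from typing import Callable, List, Tuple

# Hypothetical signature: score(target, condition) -> log P(target | condition).
Scorer = Callable[[str, str], float]


def detect_omissions(
    source_tokens: List[str],
    translation: str,
    deletions: List[Tuple[int, int]],
    score: Scorer,
) -> List[Tuple[str, float]]:
    """Flag source spans whose deletion makes the translation *more* likely."""
    full_score = score(translation, " ".join(source_tokens))
    flagged = []
    for start, end in deletions:  # end index is exclusive
        partial_source = " ".join(source_tokens[:start] + source_tokens[end:])
        gain = score(translation, partial_source) - full_score
        if gain > 0:  # translation fits the partial source better: possible omission
            flagged.append((" ".join(source_tokens[start:end]), gain))
    return flagged


def toy_score(target: str, condition: str) -> float:
    """Hypothetical stand-in for an MT model: penalizes every word that has
    no match on the other side (assumes a shared vocabulary)."""
    t = set(target.lower().split())
    c = set(condition.lower().split())
    return -float(len(c - t) + len(t - c))


source = ["Please", "exit", "the", "plane"]
translation = "Exit the plane after landing"
print(detect_omissions(source, translation, [(0, 1), (2, 4)], toy_score))
# [('Please', 1.0)]
```

Deleting “Please” raises the score of the translation, so that span is flagged; deleting “the plane” lowers it, so it is not.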

To find addition errors, the authors perform the same process in reverse. They delete short constituents from the translation, and score the full source conditioned on partial translations. If the source is more likely given a partial translation, the full translation may contain an addition error.
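
The reverse direction swaps the roles of source and translation: spans are deleted from the translation, and the full source is scored given each partial translation. The sketch below is hedged in the same way as any toy version of this idea: `score(target, condition)` is a hypothetical callable that, for this direction, would need a model scoring source-language text given target-language text, and `toy_score` is a made-up stand-in with a shared vocabulary.

```python
from typing import Callable, List, Tuple

# Hypothetical signature: score(target, condition) -> log P(target | condition).
Scorer = Callable[[str, str], float]


def detect_additions(
    source: str,
    translation_tokens: List[str],
    deletions: List[Tuple[int, int]],
    score: Scorer,
) -> List[Tuple[str, float]]:
    """Flag translation spans whose deletion makes the *source* more likely."""
    full_score = score(source, " ".join(translation_tokens))
    flagged = []
    for start, end in deletions:  # end index is exclusive
        partial_translation = " ".join(translation_tokens[:start] + translation_tokens[end:])
        gain = score(source, partial_translation) - full_score
        if gain > 0:  # source is better explained without this span: possible addition
            flagged.append((" ".join(translation_tokens[start:end]), gain))
    return flagged


def toy_score(target: str, condition: str) -> float:
    """Hypothetical stand-in for a reverse MT model (shared vocabulary assumed)."""
    t = set(target.lower().split())
    c = set(condition.lower().split())
    return -float(len(c - t) + len(t - c))


translation = ["Exit", "the", "plane", "after", "landing"]
print(detect_additions("Please exit the plane", translation, [(3, 5), (1, 3)], toy_score))
# [('after landing', 2.0)]
```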

The authors identify constituents for deletion through a dependency parse. A deletion candidate:

- Is a complete subtree of the dependency parse

- Covers a contiguous sequence of words

- Contains a part of speech of interest: nouns, verbs, adjectives, numerals, adverbs, and interjections.
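
The three criteria can be checked on a dependency parse given as a list of head indices. The sketch below is an illustration under assumptions: the parse format (head index per token, -1 for the root), the mapping of the listed parts of speech to Universal Dependencies tags, and the rule that excludes deleting the whole sentence are not taken from the post.

```python
from typing import Dict, List, Tuple

# Assumed UD tags for nouns, verbs, adjectives, numerals, adverbs, interjections.
CONTENT_POS = {"NOUN", "VERB", "ADJ", "NUM", "ADV", "INTJ"}


def build_children(heads: List[int]) -> Dict[int, List[int]]:
    """Map each token index to its dependents (head -1 marks the root)."""
    children: Dict[int, List[int]] = {i: [] for i in range(len(heads))}
    for i, h in enumerate(heads):
        if h >= 0:
            children[h].append(i)
    return children


def subtree(index: int, children: Dict[int, List[int]]) -> List[int]:
    """Sorted indices of a token and all of its descendants."""
    nodes, stack = [], [index]
    while stack:
        node = stack.pop()
        nodes.append(node)
        stack.extend(children[node])
    return sorted(nodes)


def deletion_candidates(tokens: List[str], heads: List[int], pos: List[str]) -> List[Tuple[int, int]]:
    children = build_children(heads)
    spans = set()
    for i in range(len(tokens)):
        nodes = subtree(i, children)                     # complete subtree
        start, end = nodes[0], nodes[-1] + 1             # end is exclusive
        contiguous = len(nodes) == end - start           # no gaps in the span
        has_content = any(pos[n] in CONTENT_POS for n in nodes)
        whole_sentence = start == 0 and end == len(tokens)  # assumption: skip
        if contiguous and has_content and not whole_sentence:
            spans.add((start, end))
    return sorted(spans)


tokens = ["Please", "exit", "the", "plane"]
heads = [1, -1, 3, 1]  # "exit" is the root; "the" attaches to "plane"
pos = ["INTJ", "VERB", "DET", "NOUN"]
print(deletion_candidates(tokens, heads, pos))  # [(0, 1), (2, 4)]
```

The determiner “the” alone is rejected because it has no part of speech of interest, leaving “Please” and “the plane” as candidates.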

Experiments

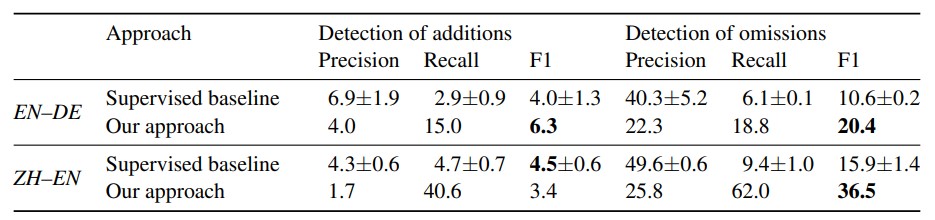

The authors use the mBART50 multilingual MT model to score sequences, and evaluate on English-German and Chinese-English annotated translations made available by Freitag et al. (2021).

To contrast with their reference-free approach, the authors train a supervised baseline. The supervised baseline requires a parallel coverage error dataset for training, which the authors create synthetically. They create partial sources by randomly deleting source constituents, then forward translate to produce full and partial translations. The full translations are over-translations of the partial sources, and the partial translations are under-translations of the full sources.
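
The data-generation recipe for the baseline can be sketched as below. The `translate` callable is a hypothetical stand-in for the MT system used for forward translation, the spans are assumed to come from a constituent extractor, and the pair-level string labels are an assumption, since the post does not describe the baseline's label format.

```python
import random
from typing import Callable, Dict, List, Tuple


def make_synthetic_examples(
    source_tokens: List[str],
    deletions: List[Tuple[int, int]],
    translate: Callable[[str], str],
    k: int = 1,
    seed: int = 0,
) -> List[Dict[str, str]]:
    """Build labelled coverage-error pairs by deleting random source constituents."""
    rng = random.Random(seed)
    full_source = " ".join(source_tokens)
    full_translation = translate(full_source)
    examples = []
    for start, end in rng.sample(deletions, min(k, len(deletions))):
        partial_source = " ".join(source_tokens[:start] + source_tokens[end:])
        partial_translation = translate(partial_source)
        # The full translation over-translates the partial source ...
        examples.append({"source": partial_source, "translation": full_translation, "label": "addition"})
        # ... and the partial translation under-translates the full source.
        examples.append({"source": full_source, "translation": partial_translation, "label": "omission"})
    return examples


# str.upper is a toy, hypothetical "translation system".
examples = make_synthetic_examples(["Please", "exit", "the", "plane"], [(0, 1)], str.upper)
for ex in examples:
    print(ex)
```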

The authors’ approach significantly outperforms the baseline at detecting omission errors, although both approaches struggle with addition errors. A human evaluation of the predictions shows that many flagged spans counted as incorrect do point to genuine translation errors, even though the model assigned them to the wrong error category.

In summary

Vamvas & Sennrich (2022) explore two ways to evaluate MT for over- and under-translation. They find that a reference-free, contrastive conditioning approach outperforms a supervised baseline when detecting omissions.