Issue #182 - DYLE: Dynamic Latent Extraction for Abstractive Long-Input Summarization

Introduction

Automatic summarization is the task of processing a (potentially quite long) input document and outputting a summary that highlights the most relevant aspects of the document. Typically, there have been two machine learning approaches to this task: (1) extractive summarization, which retrieves the sentences in a document that best sum up its content, and (2) abstractive summarization, which generates the text of a summary word by word. While extractive approaches have the advantage of retrieving source text verbatim from the input document, abstractive approaches often produce more fluent output (although they may not be firmly grounded in the input document).

Processing long input documents has been a challenge for automatic summarization, particularly for abstractive models. Several approaches have been proposed to address this problem, including augmenting Transformer models to more efficiently handle large inputs (Beltagy et al., 2020), hierarchical techniques for processing whole documents (Rohde et al., 2021), and “extract-then-generate” methods that first run extraction models over a long document and then re-write these extracted segments using a sequence-to-sequence model (Zhang et al., 2019).

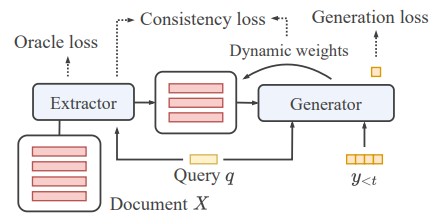

In today’s post, we look at the ACL 2022 paper by Mao et al. (2022), “DYLE: Dynamic Latent Extraction for Abstractive Long-Input Summarization”, which presents a technique for abstractive summarization of long documents based on the “extract-then-generate” framework. The paper introduces several key improvements that allow the extraction and generative components of the model to be trained jointly, so that both can be tuned to the domain of the training set. A depiction of the overall model is shown in Figure 1 below.

Figure 1: Overview of proposed DYLE model architecture.

The main contributions from the authors are:

- Reduction of computational cost of processing extremely long documents using Transformer models

- A multi-task training regime that allows both the extraction and generative components of the model to be trained simultaneously

- Results show state-of-the-art performance on several long-document summarization datasets

Background and Related Work

Extract-then-Generate Summarization

Abstractive summarization systems rely on sequence-to-sequence neural architectures, which often have a hard limit on the number of input tokens they can process at once. Additionally, the decoders for these models often struggle to generate salient information, and can even “hallucinate” information not present in the source article. This presents a major drawback for summarizing long input documents such as lengthy formal reports or scientific publications. Extractive systems, on the other hand, more reliably produce factual summaries because they retrieve salient text directly from the input document. However, these summaries often end up sounding a bit clunky or disfluent.

One way to mitigate these problems is to fuse both approaches in a two-step modeling process. The “extract-then-generate” approach first runs a pre-trained extractive model over an input document and retrieves a set of sentences relevant to summarization. These retrieved sentences are then fed to a sequence-to-sequence generative model that effectively “rewrites” them into a summary. While this technique seeks to combine the best of both extractive and abstractive systems, it often suffers from information loss, since the generative component does not have access to the full document context. Additionally, the extractive model often has its weights frozen while the generative model is trained, which prevents the entire system from fully adapting to the training set.

Methods and Approach

DYLE Modeling Overview

In their work, Mao et al. (2022) build upon the extract-then-generate approach but propose several modifications. The first is to allow both the extractor and generator to have their weights updated during the training process. While previous work has relied on reinforcement learning to train both components of extract-then-generate systems, the authors propose a clever workaround: sharing hidden states from the extractor with the generator. This approach allows the entire model to be trained with maximum likelihood estimation, which is much more computationally efficient than most reinforcement learning techniques. However, several details are required to make this technique work, which we will cover below.

Text Extraction from Long Documents

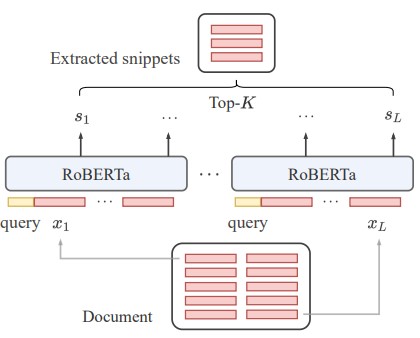

Extractive summarization works by training a model to identify individual sentences in a document that should be present in the document’s summary. This is implemented as a binary classification problem, where each sentence in a document is labeled as either “should appear in summary” or “should not appear in summary”. At inference time, an extractive model can then retrieve a set of top-k sentences, ranked by the probability assigned by the extractive classifier. Figure 2 below shows a schematic of a typical extractor model.

Figure 2: RoBERTa-based extractor model used by Mao et al.
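To make the top-k retrieval step concrete, here is a minimal sketch in plain Python. It assumes the classifier has already assigned one probability per sentence; the function name `top_k_sentences`, the example sentences, and the scores are all hypothetical, and returning the selection in document order is our own choice rather than something the paper specifies.

```python
from typing import List, Tuple


def top_k_sentences(
    sentences: List[str], scores: List[float], k: int
) -> List[Tuple[int, str]]:
    """Return the k highest-scoring sentences as (index, sentence) pairs.

    Assumption: scores[i] is the classifier's probability that
    sentences[i] should appear in the summary.
    """
    if len(sentences) != len(scores):
        raise ValueError("need exactly one score per sentence")
    # Rank sentence indices by classifier probability, highest first.
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    # Keep the top k, then restore document order (an illustrative choice).
    keep = sorted(ranked[:k])
    return [(i, sentences[i]) for i in keep]


# Hypothetical sentences and classifier scores.
sentences = [
    "The committee met on Monday.",
    "The budget was cut by 10%.",
    "Lunch was served.",
    "A new audit is planned.",
]
scores = [0.20, 0.91, 0.05, 0.77]
selected = top_k_sentences(sentences, scores, k=2)
```

Here `selected` holds the second and fourth sentences, since they carry the two highest scores.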

While this is a straightforward approach, long documents prohibit these extractor models from processing all sentences at the same time due to computational and memory requirements. To mitigate this problem, the authors group several sentences into chunks that are then processed and eventually fed to the generator.
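The grouping of sentences into chunks can be sketched as a simple greedy packing routine. This is only an illustration under our own assumptions: the helper `chunk_sentences` is hypothetical, whitespace splitting stands in for a real subword tokenizer, and the token budget is arbitrary. The paper's actual chunking procedure may differ.

```python
from typing import List


def chunk_sentences(sentences: List[str], max_tokens: int) -> List[List[str]]:
    """Greedily pack consecutive sentences into chunks of at most max_tokens.

    Assumption: tokens are approximated by whitespace-separated words.
    A single sentence longer than max_tokens still gets its own chunk.
    """
    chunks: List[List[str]] = []
    current: List[str] = []
    current_len = 0
    for sentence in sentences:
        n_tokens = len(sentence.split())
        # Start a new chunk when adding this sentence would exceed the budget.
        if current and current_len + n_tokens > max_tokens:
            chunks.append(current)
            current, current_len = [], 0
        current.append(sentence)
        current_len += n_tokens
    if current:
        chunks.append(current)
    return chunks


# Toy "document" whose sentences have 3, 2, 4, and 1 tokens.
doc = ["a b c", "d e", "f g h i", "j"]
chunks = chunk_sentences(doc, max_tokens=5)
```

Each chunk can then be scored by the extractor independently, which keeps the per-pass input length bounded regardless of how long the full document is.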

Sharing Extractor Hidden States

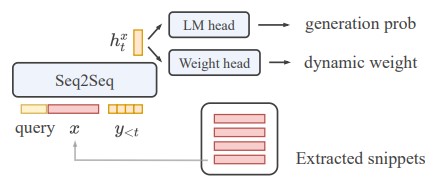

While previous “extract-then-generate” systems utilize the sentences selected by the extractor as input for the generator, the authors propose a modification to this setup. Instead of requiring the generator model to ingest the raw words from the sentences selected by the extractor, the authors instead expose the final hidden states from the extractor, and these serve as the input for the generator. The hidden states can be thought of as the extractor encodings of the relevant sentences. An overview of this component of the system is given in Figure 3 below.

Figure 3: Overview of the generator model proposed in this work.

Thus, instead of being trained to maximize $P(y_t \mid x, y_{t-1})$ as normal, the generator model is trained to maximize $P(y_t \mid X_K, y_{t-1})$, where $X_K$ are the top-k retrieved sentences encoded by the extractor model. In addition, the generator is also trained to weight each of the extracted sentences with an attention module. This ensures that higher weight is placed on more relevant extracted snippets.
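One way to picture the weighting over extracted snippets is as a softmax over per-snippet relevance scores, followed by a weighted mixture of the next-token distributions conditioned on each snippet. The sketch below illustrates that idea only. The mixture form, the function names, and all numbers are our assumptions for illustration and are not taken from the authors' implementation.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mix_token_distributions(
    snippet_logits: List[float], per_snippet_probs: List[List[float]]
) -> Tuple[List[float], List[float]]:
    """Combine per-snippet next-token distributions using snippet weights.

    snippet_logits[i]: hypothetical relevance score of snippet i at this step.
    per_snippet_probs[i][v]: hypothetical probability of vocabulary item v
    when the generator conditions on snippet i.
    """
    weights = softmax(snippet_logits)
    vocab_size = len(per_snippet_probs[0])
    mixed = [0.0] * vocab_size
    for w, probs in zip(weights, per_snippet_probs):
        for v, p in enumerate(probs):
            mixed[v] += w * p
    return weights, mixed


# Two snippets, a three-word toy vocabulary.
snippet_logits = [2.0, 0.0]
per_snippet_probs = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
weights, mixed = mix_token_distributions(snippet_logits, per_snippet_probs)
```

Because the first snippet receives the larger weight, the mixed distribution leans toward the tokens that snippet favors, while still summing to one.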

Auxiliary Loss Functions

Finally, while the authors propose a useful technique for training both the generator and extractor modules simultaneously, there is still a danger of the extractor and generator modules diverging from one another. To combat this, the authors implement what they call a “consistency loss” to ensure that the hidden representations from the extractor and generator are close to one another. This loss is implemented as the KL-divergence between the output of the generator and the output of the extractor, and the model is trained to minimize this divergence.
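As a rough illustration of a KL-divergence consistency term, the sketch below compares two probability distributions over the same set of extracted snippets. The direction of the divergence, the variable names, and the example values are assumptions on our part, and the paper should be consulted for the exact formulation.

```python
import math
from typing import List


def kl_divergence(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions over the same items.

    eps guards against division by zero and log(0); terms with p_i == 0
    contribute nothing, following the usual convention.
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    return sum(
        pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0
    )


# Hypothetical distributions over three extracted snippets.
generator_weights = [0.7, 0.2, 0.1]  # how much the generator relied on each
extractor_probs = [0.5, 0.3, 0.2]    # extractor scores, normalized to sum to 1

consistency = kl_divergence(generator_weights, extractor_probs)
```

The value is zero when the two distributions agree exactly and grows as they drift apart, which is what makes it usable as a penalty during training.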

The extractor is trained on the binary task of selecting the most relevant sentences for the source document, and the generator is trained on the cross-entropy loss between its generated outputs and the reference summary. Combining all of these training signals, then, the final loss for the entire system becomes:

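$$\mathcal{L}^{\eta,\theta} = \lambda_{\mathrm{gen}}\,\mathcal{L}^{\theta}_{\mathrm{gen}} + \lambda_{\mathrm{orac}}\,\mathcal{L}^{\eta}_{\mathrm{orac}} + \lambda_{\mathrm{consist}}\,\mathcal{L}^{\eta}_{\mathrm{consist}}$$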

Here, the gen, orac, and consist subscripts refer to the generation, oracle extraction, and consistency losses respectively, θ refers to the generator parameters, and η refers to the extractor parameters. Each λ is a free hyperparameter that is tuned per dataset and controls the weight of the corresponding component loss.
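The weighted sum above can be sketched in a few lines of Python. The two component losses shown here (binary cross-entropy for the extractor and average negative log-likelihood for the generator) follow the description in this post, but the function names, the default λ values, and the example numbers are placeholders we chose for illustration.

```python
import math
from typing import List


def binary_cross_entropy(probs: List[float], labels: List[int]) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    eps = 1e-12  # avoids log(0)
    losses = [
        -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
        for p, y in zip(probs, labels)
    ]
    return sum(losses) / len(losses)


def token_nll(reference_token_probs: List[float]) -> float:
    """Average negative log-likelihood of the reference summary tokens."""
    return -sum(math.log(p) for p in reference_token_probs) / len(reference_token_probs)


def total_loss(
    gen_loss: float,
    oracle_loss: float,
    consistency_loss: float,
    lambda_gen: float = 1.0,      # placeholder weight, tuned per dataset
    lambda_orac: float = 1.0,     # placeholder weight, tuned per dataset
    lambda_consist: float = 1.0,  # placeholder weight, tuned per dataset
) -> float:
    """Weighted sum of the generation, oracle, and consistency losses."""
    return (
        lambda_gen * gen_loss
        + lambda_orac * oracle_loss
        + lambda_consist * consistency_loss
    )


# Hypothetical values for one training example.
oracle = binary_cross_entropy(probs=[0.9, 0.2, 0.6], labels=[1, 0, 1])
generation = token_nll([0.5, 0.25, 0.8])
combined = total_loss(generation, oracle, consistency_loss=0.1,
                      lambda_orac=0.5, lambda_consist=2.0)
```

Setting any one λ to zero reproduces the kind of single-loss ablation discussed later in this post.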

Experiments and Results

Datasets

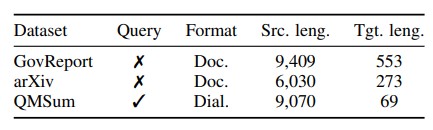

The authors look at performance on three datasets that all focus on long-document summarization:

- QMSum: a query-based meeting summarization dataset that covers multiple domains

- GovReport: a collection of 19.5k U.S. government reports with expert-written summaries

- arXiv: a collection of scientific articles collected from arXiv that utilize the article abstract as the summary

A breakdown of dataset statistics is given in Table 1 below. The average numbers of source and target tokens for each dataset are given in the two right-most columns. Of particular note is the large input size for many of the datasets, often exceeding 9,000 tokens.

Table 1: Overview of datasets used in the authors’ experiments.

Experiments

For each dataset, the authors compare against previously reported results that have utilized various Transformer-based architectures including BART, Longformer, and Pegasus. Interested readers should refer to the paper for more details on comparison models. All models are evaluated using the ROUGE metric, which combines precision and recall over overlapping word sequences (n-grams) to evaluate how closely a machine-generated summary corresponds to a gold standard reference summary.
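For readers unfamiliar with ROUGE, the following is a simplified sketch of ROUGE-N computed from clipped n-gram overlap. It is not the official scorer: it lowercases and splits on whitespace, and skips the stemming and other preprocessing that standard ROUGE packages apply, so its numbers will not match published results. The function names and example sentences are our own.

```python
from collections import Counter
from typing import Dict, List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> Dict[str, float]:
    """Simplified ROUGE-N: precision, recall, and F1 over n-gram overlap."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    # Counter intersection clips each n-gram count to the smaller of the two.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# Toy example: 3 of the 5 bigrams are shared between the two sentences.
scores = rouge_n("the cat sat on the mat", "the cat lay on the mat", n=2)
```

In this toy example the candidate and reference each contain five bigrams and share three of them, so ROUGE-2 precision, recall, and F1 all come out to 0.6.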

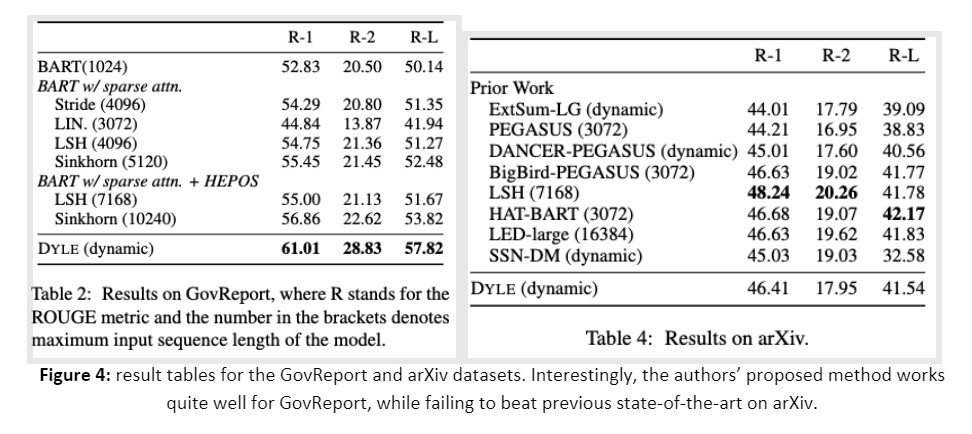

In-depth results for the GovReport and arXiv datasets are given in Figure 4 below. (Many more results are reported in the original paper; we focus on these to save space and to highlight cases where the authors’ proposed method succeeds and fails.) The authors note strong performance of their method on the GovReport dataset, achieving a 6.21-point absolute improvement in ROUGE-2 score over previous methods. However, their method fails to outperform several methods on the arXiv dataset. The authors posit that because the abstracts that serve as the summary references in this dataset are quite long and abstractive, their method struggles due to its reliance on extraction.

While these results show interesting cases where the authors’ proposed methods work and where they may fall short, there are many additional details in the proposed training method that may affect model performance. To assess the contribution of some of these details, the authors provide further analysis of (a) the number of retrieved sentences fed to the generator and (b) an ablation of the various losses used for training of their system.

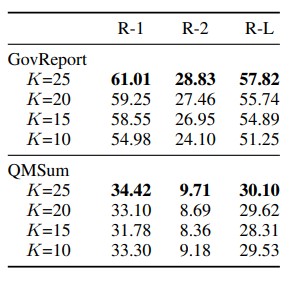

Table 2 below shows results for varying the number of retrieved sentences, K, passed from the extractor portion of the model, sweeping over values from 10 to 25. Performance is consistently best at the highest value of K, indicating that greater input context is advantageous to model performance.

Table 2: Results for varying the number of retrieved sentences for the GovReport and QMSum datasets.

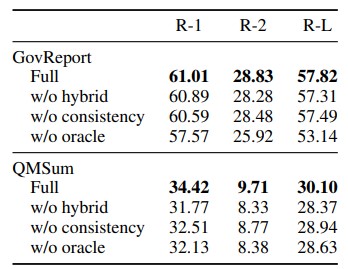

In Table 3 below, the authors systematically remove one of the losses from the model and compare the result to training with the full loss described above. Starting from the bottom row of each sub-table, “w/o oracle” removes the loss for the extractor module, “w/o consistency” removes the consistency loss that ensures the generator and extractor outputs are “close” to one another, and “w/o hybrid” modifies the consistency loss to only consider the ground-truth extracted sentences without regard to the extractor outputs. The top “full” row indicates training with all losses.

Table 3: Ablation analysis of various loss functions used in proposed model.

For both datasets, training with the full loss performs best, indicating that each component loss contributes to downstream performance. Interestingly, which ablation hurts performance most depends on the dataset. For GovReport, removing the oracle training signal for the extractor hurts performance most, while for QMSum the “w/o hybrid” setting has the largest effect. This indicates that, while the “full” loss performs best across datasets, different component losses may contribute more to performance depending on the training data.

In summary

To conclude, Mao et al. show how combining extractive and abstractive approaches for automatic summarization can boost performance for long documents. Additionally, the authors expand on previous “extract-then-generate” methods by proposing a system that can jointly train the extractor and generator modules without the need for computationally expensive reinforcement learning techniques. Finally, while the authors’ method outperforms many previous techniques on several datasets, further work is needed to understand why their method falls short in highly abstractive settings such as the arXiv dataset. While this paper focuses on summarization, it’s an interesting example of combining signals from multiple models to improve text generation, which may be applicable to other cases such as question answering or translation.