Issue #63 - Neuron Interaction Based Representation Composition for Neural Machine Translation

05 Dec 2019

Introduction

Transformer models are the state of the art in Neural Machine Translation (NMT). In this blog post, we take a look at an approach recently proposed by Li et al. (2019) that further improves upon the transformer model by modeling more interactions between neurons. Li et al. (2019) claim that their approach produces better encoder representations and captures semantic and syntactic properties better than the baseline transformer.

Bilinear Pooling:

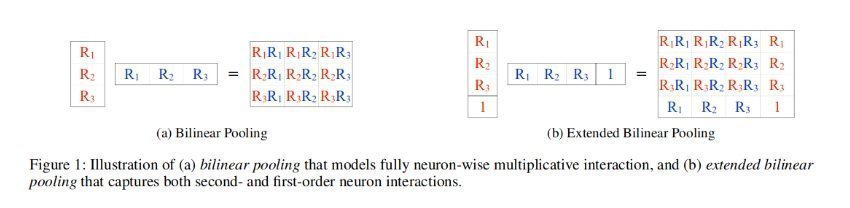

Bilinear pooling (Tenenbaum and Freeman, 2000) is defined as the outer product of two representation vectors followed by a linear projection. In the transformer model, we have representation vectors from multiple layers and multiple attention heads, and these vectors are concatenated to form the input to bilinear pooling. Because bilinear pooling encodes only second-order (i.e., multiplicative) interactions among individual neurons, Li et al. (2019) propose extended bilinear pooling, which also includes first-order representations. The figure above illustrates bilinear and extended bilinear pooling.
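To make the difference between the two variants concrete, here is a minimal NumPy sketch. It assumes that extended bilinear pooling is obtained by appending a constant 1 to each input vector before taking the outer product, which is one common way to bring first-order terms into the product. The function names, the dense projection matrix `W`, and the toy layer vectors are illustrative assumptions, not Li et al.'s implementation.

```python
import numpy as np


def bilinear_pooling(x, y, W):
    """Outer product of x and y, flattened, then linearly projected by W.

    x: (d1,), y: (d2,), W: (d_out, d1 * d2)
    """
    outer = np.outer(x, y)          # (d1, d2): every pairwise product x_i * y_j
    return W @ outer.reshape(-1)    # (d_out,): second-order terms only


def extended_bilinear_pooling(x, y, W):
    """Assumed variant: append a constant 1 to each input before the outer product.

    The outer product of [x; 1] and [y; 1] contains the products x_i * y_j
    (second order), the raw entries x_i and y_j (first order), and a constant 1.
    W: (d_out, (d1 + 1) * (d2 + 1))
    """
    x1 = np.append(x, 1.0)
    y1 = np.append(y, 1.0)
    return W @ np.outer(x1, y1).reshape(-1)


# Usage: concatenate (hypothetical) representations from two layers, then pool.
layer_1 = np.array([0.5, -1.0])
layer_2 = np.array([2.0, 0.0])
h = np.concatenate([layer_1, layer_2])          # (4,)
rng = np.random.default_rng(0)
W = rng.normal(size=(3, (h.size + 1) ** 2))     # hypothetical projection to 3 dims
pooled = extended_bilinear_pooling(h, h, W)     # (3,)
```

The dense `W` has (d1 + 1)(d2 + 1) columns, so this direct form grows quickly with the representation size; it is only meant to show which terms each variant can see.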

Experiments and Results:

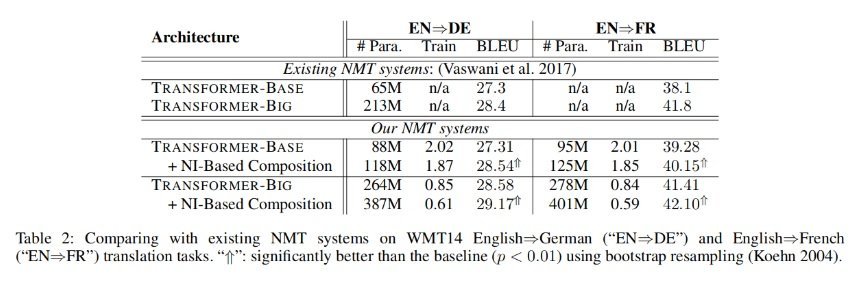

They conducted experiments on the WMT2014 English⇒German (En⇒De) and English⇒French (En⇒Fr) translation tasks. The En⇒De dataset consists of about 4.56 million sentence pairs, and the En⇒Fr dataset consists of 35.52 million sentence pairs. They used newstest2014 as the test set. The table above shows that the approach improved over the baseline transformer models by 0.6 to 1.2 BLEU points.

Linguistic Results:

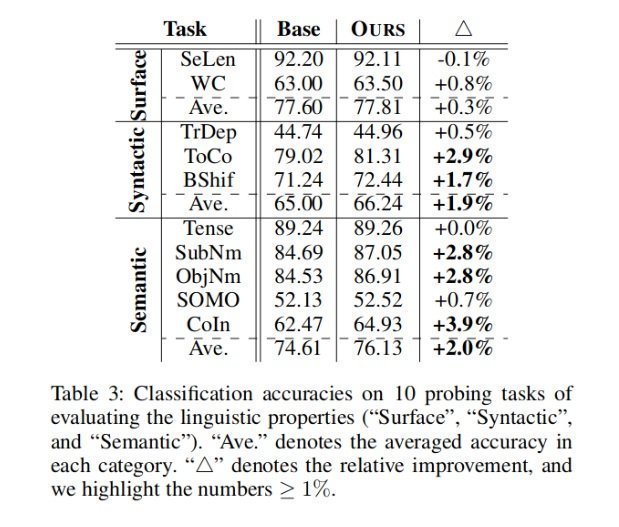

Another part of the evaluation examined the impact of extended bilinear pooling on the encoder representations. Conneau et al. (2018) designed 10 probing tasks to study which linguistic properties are captured by the representations of sentence encoders, and Li et al. (2019) used the same tasks to evaluate the linguistic properties captured by their encoder. The table above presents the results on all 10 tasks: the approach obtained better results than the baseline on eight of the 10 tasks.
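Probing in general means training a lightweight classifier on frozen encoder representations and reading its held-out accuracy as evidence of how much information about a property those representations hold. The sketch below illustrates that idea with a logistic-regression probe trained by plain gradient descent on synthetic vectors. The probe type, the hyperparameters, the function names, and the data are all assumptions for illustration; they do not reproduce the classifiers or tasks of Conneau et al. (2018) or Li et al. (2019).

```python
import numpy as np


def train_probe(features, labels, lr=0.5, epochs=500):
    """Fit a logistic-regression probe on frozen representations.

    features: (n, d) array of encoder outputs (never updated here);
    labels: (n,) array of 0/1 values for one linguistic property.
    """
    n, d = features.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        grad = probs - labels              # per-example cross-entropy gradient w.r.t. the logit
        w -= lr * features.T @ grad / n    # only the probe's weights change
        b -= lr * grad.mean()
    return w, b


def probe_accuracy(features, labels, w, b):
    preds = (features @ w + b) > 0
    return float((preds == labels.astype(bool)).mean())


# Usage with synthetic stand-ins for frozen encoder outputs.
rng = np.random.default_rng(0)
feats = rng.normal(size=(200, 8))
labels = (feats[:, 0] > 0).astype(float)       # toy "property" stored in one dimension
w, b = train_probe(feats[:150], labels[:150])
acc = probe_accuracy(feats[150:], labels[150:], w, b)   # held-out accuracy
```

Keeping the probe simple is the point of the design: if even a linear classifier can recover the property, the information is readily accessible in the representation rather than being computed by the probe itself.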