Issue #68 - Incorporating BERT in Neural MT

Introduction

BERT (Bidirectional Encoder Representations from Transformers) has shown impressive results on a range of Natural Language Processing (NLP) tasks. However, how best to apply BERT to Neural MT has not been fully explored. BERT is typically fine-tuned on downstream NLP tasks. For Neural MT, a pre-trained BERT model is typically used to initialise the encoder of an encoder-decoder architecture. In this post, we discuss an improved technique for incorporating BERT into Neural MT: the BERT-fused model proposed by Zhu et al. (2020).

BERT-fused model

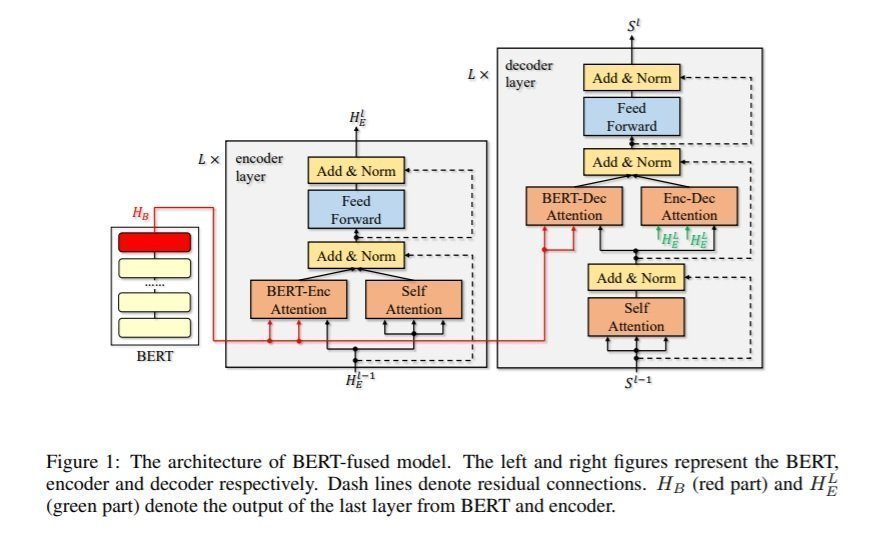

Zhu et al. (2020) proposed a modified encoder-decoder architecture. BERT first extracts a representation of the input sequence, and this representation is then fused into each layer of the NMT encoder and decoder through cross-attention, as depicted in Figure 1. In both the BERT-enc and BERT-dec attention, the Keys (K) and Values (V) are computed from the BERT representation. For BERT-enc attention, the Query (Q) is obtained by transforming the encoder representation from the previous layer. For BERT-dec attention, the Query comes from the output of the decoder's self-attention.

Drop Net Trick

Inspired by dropout and drop-path, Zhu et al. (2020) proposed drop-net. It controls how much the model relies on the BERT representation compared with the representation produced by the NMT encoder and decoder themselves. Given a drop rate p_net ∈ [0, 1], each layer during training behaves as follows:

- With probability p_net/2, it uses only the BERT-enc/dec attention.
- With probability p_net/2, it uses only the NMT model's own attention (self-attention in the encoder, encoder-decoder attention in the decoder).
- With probability 1 − p_net, it uses both attentions and averages their outputs.

At inference time, the two outputs are always averaged. For the mathematical details, we recommend checking out the paper.

Experiments and Results

Datasets: For low-resource scenarios, the authors used the IWSLT’14 English↔German (En↔De) and English→Spanish (En→Es) datasets. They also used the IWSLT’17 English→French (En→Fr) and English→Chinese (En→Zh) datasets. For the rich-resource scenario, they used the WMT’14 En→De and En→Fr corpora.

Training Strategy: The authors first train an NMT model until convergence. They then initialise the encoder and decoder of the BERT-fused model with the trained NMT encoder and decoder weights. The BERT-enc/dec attention modules are initialised randomly, and the BERT-fused model is then trained further to obtain the final model.

Results: In low-resource settings (IWSLT), they reported improvements ranging from 1.47 to 2.88 BLEU points. In rich-resource settings (WMT’14), the proposed model outperformed a strong baseline by 1.65 BLEU points for En→De and 0.82 BLEU points for En→Fr.
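The fused attention, drop-net and two-stage initialisation can be tied together in a small sketch. Below is a minimal pure-Python illustration of one BERT-fused encoder layer. It rests on several assumptions and is not the authors' implementation:

- It uses single-head attention.
- It omits residual connections, layer normalisation and the feed-forward sublayer.
- It covers the encoder side only. In the decoder, BERT-dec attention is mixed with encoder-decoder attention instead.

The function names `attend`, `drop_net`, `fused_encoder_layer` and `init_fused_from_nmt`, and the weight keys `Wq`/`Wk`/`Wv`, are hypothetical. The drop-net sample `u` is drawn once per layer and shared by all positions. Outside training, the two attention outputs are simply averaged.

```python
import math
import random

# Hypothetical sketch of a BERT-fused *encoder* layer with drop-net.
# Assumptions: single-head attention; no residuals, layer norm or feed-forward.

def rand_matrix(rows, cols, rng, scale=0.1):
    """Randomly initialised weight matrix stored as a list of rows."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(params, query, kv_seq):
    """Scaled dot-product attention for one query position.
    Q is projected from `query`; K and V are projected from every vector in `kv_seq`."""
    q = matvec(params["Wq"], query)
    keys = [matvec(params["Wk"], x) for x in kv_seq]
    values = [matvec(params["Wv"], x) for x in kv_seq]
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]

def drop_net(self_out, bert_out, p_net, u, training):
    """Combine the two attention outputs given a uniform sample u in [0, 1)."""
    if training and u < p_net / 2:
        return list(self_out)  # only the NMT self-attention branch
    if training and u > 1 - p_net / 2:
        return list(bert_out)  # only the BERT-enc attention branch
    return [0.5 * (a + b) for a, b in zip(self_out, bert_out)]  # both, averaged

def fused_encoder_layer(layer, H, H_bert, p_net=0.0, training=False, rng=None):
    """H: previous encoder layer outputs (d_model each); H_bert: BERT outputs (d_bert each)."""
    rng = rng if rng is not None else random.Random()
    u = rng.random()  # one drop-net sample for the whole layer
    out = []
    for h in H:
        s = attend(layer["self_attn"], h, H)       # Q, K, V from the NMT encoder
        b = attend(layer["bert_attn"], h, H_bert)  # Q from the encoder, K/V from BERT
        out.append(drop_net(s, b, p_net, u, training))
    return out

def init_fused_from_nmt(nmt_layer, d_model, d_bert, rng):
    """Copy converged NMT self-attention weights; randomly initialise BERT attention."""
    return {
        "self_attn": {k: [row[:] for row in W] for k, W in nmt_layer["self_attn"].items()},
        "bert_attn": {
            "Wq": rand_matrix(d_model, d_model, rng),
            "Wk": rand_matrix(d_model, d_bert, rng),  # maps BERT states (d_bert) to d_model
            "Wv": rand_matrix(d_model, d_bert, rng),
        },
    }

# Usage with toy sizes; the "converged" NMT layer is random here, for illustration only.
rng = random.Random(0)
d_model, d_bert = 4, 6
nmt_layer = {"self_attn": {n: rand_matrix(d_model, d_model, rng) for n in ("Wq", "Wk", "Wv")}}
layer = init_fused_from_nmt(nmt_layer, d_model, d_bert, rng)
H = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(3)]      # 3 source tokens
H_bert = [[rng.gauss(0, 1) for _ in range(d_bert)] for _ in range(5)]  # 5 BERT sub-word tokens
train_out = fused_encoder_layer(layer, H, H_bert, p_net=1.0, training=True, rng=rng)
infer_out = fused_encoder_layer(layer, H, H_bert)  # inference: always the average
print(len(infer_out), len(infer_out[0]))  # → 3 4
```

The sketch mirrors the two-stage training strategy. Only `bert_attn` starts from random weights, while `self_attn` is copied from the NMT model. In a real system, all of these weights would then be trained further, with drop-net active.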