Issue #86 - Neural MT with Levenshtein Transformer

Introduction

The standard Transformer model is autoregressive, meaning that the prediction of each target word is conditioned on the predictions for the previous words. The output is generated from left to right, with no chance to revise a past decision and without taking into account the words to the right of the current position, which have not been predicted yet. In a recent post (#82), we briefly introduced the Levenshtein Transformer, a model that relaxes the autoregressive assumption and allows the generated output to be revised. That post was mainly about constrained decoding. In the present post, we focus on the Levenshtein Transformer model itself and highlight its potential in a translation workflow.

Levenshtein Transformer (LevT)

The Levenshtein Transformer (Gu et al., 2019) is a partially autoregressive model based on a combination of insertions and deletions. This allows the same model either to generate an output from scratch or to post-edit a partial output. In this respect, the model is closer to the human translation process, in which translators may revise, replace, revoke or delete any part of the text they have produced.

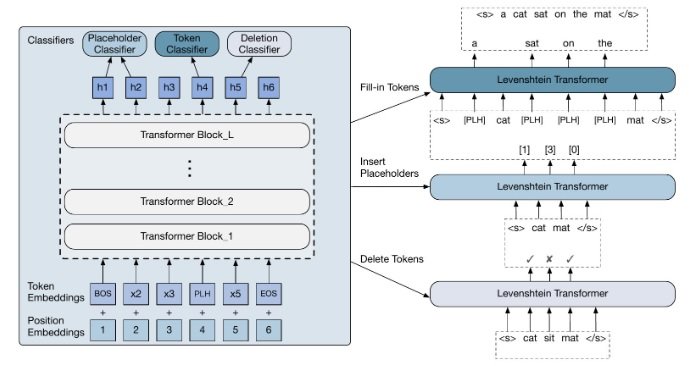

LevT is based on an encoder-decoder framework using Transformer blocks. However, its decoder differs from the standard Transformer decoder, as depicted in the figure above. The decoder output feeds three classifiers (left part of the figure):

- Deletion Classifier: predicts, for each token position, whether the token should be “kept” or “deleted”,

- Placeholder Classifier: predicts the number of tokens to be inserted between every two consecutive tokens and then inserts the corresponding number of placeholder [PLH] tokens,

- Token Classifier: predicts for each [PLH] token an actual target token.

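These classifiers are applied in sequence (deletion, then placeholder insertion, then token insertion) to produce the output (right part of the figure). This cycle forms one refinement iteration, and several iterations can be run to refine the output further.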
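The deletion, placeholder and token steps can be sketched as a small refinement loop. This is a minimal illustration, not the authors' implementation: the three classifiers are replaced by hypothetical toy policies (`toy_delete`, `toy_plh`, `toy_token`) that peek at a fixed target, the `<s>`/`</s>` boundary tokens are assumed to be never deleted, and the stopping rule (stop when an iteration changes nothing, or after `max_iters=10` iterations) is an assumption of this sketch. The two calls show the same loop post-editing a partial output and generating from scratch.

```python
from typing import List

BOS, EOS, PLH = "<s>", "</s>", "[PLH]"


def apply_deletion(seq: List[str], delete: List[bool]) -> List[str]:
    # Drop tokens flagged for deletion; boundary tokens are always kept.
    return [t for t, d in zip(seq, delete) if t in (BOS, EOS) or not d]


def apply_placeholders(seq: List[str], counts: List[int]) -> List[str]:
    # counts[i] placeholders are inserted between seq[i] and seq[i + 1].
    out = [seq[0]]
    for i in range(1, len(seq)):
        out.extend([PLH] * counts[i - 1])
        out.append(seq[i])
    return out


def apply_tokens(seq: List[str], words: List[str]) -> List[str]:
    # Replace each [PLH] with the next predicted word, left to right.
    fill = iter(words)
    return [next(fill) if t == PLH else t for t in seq]


def refine(seq, delete_policy, plh_policy, token_policy, max_iters=10):
    # One iteration = deletion -> placeholder insertion -> token insertion.
    for _ in range(max_iters):
        new = apply_deletion(seq, delete_policy(seq))
        new = apply_placeholders(new, plh_policy(new))
        new = apply_tokens(new, token_policy(new))
        if new == seq:  # stop once an iteration changes nothing
            break
        seq = new
    return seq


# Hypothetical toy policies that peek at a fixed target (illustration only;
# in LevT these are the neural deletion/placeholder/token classifiers).
TARGET = ["the", "cat", "sat"]


def toy_delete(seq: List[str]) -> List[bool]:
    return [t not in TARGET for t in seq]


def toy_plh(seq: List[str]) -> List[int]:
    # Put all missing tokens in the last gap (before </s>).
    missing = max(0, len(TARGET) - (len(seq) - 2))
    return [0] * (len(seq) - 2) + [missing]


def toy_token(seq: List[str]) -> List[str]:
    return [w for w in TARGET if w not in seq][: seq.count(PLH)]


print(refine([BOS, "the", "dog", EOS], toy_delete, toy_plh, toy_token))  # post-edit
print(refine([BOS, EOS], toy_delete, toy_plh, toy_token))                # from scratch
```

Both calls print `['<s>', 'the', 'cat', 'sat', '</s>']`: in the first, "dog" is deleted and two placeholders are filled; in the second, everything is inserted in a single iteration, and the following iteration changes nothing, which ends the loop.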

The training algorithm, called “dual policy learning”, builds on the fact that insertion and deletion are complementary but also adversarial operations. When training one policy (insertion or deletion), the output of its adversary from the previous iteration is used as input. An expert policy provides the correction signal, that is, the actions the model should have taken. This expert policy is derived directly from the ground-truth targets, as the edits that turn the current output into the reference with minimal Levenshtein distance.
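One way to derive such expert actions can be sketched as follows, assuming edits are restricted to insertions and deletions. Under that restriction, the minimal edit keeps a longest common subsequence (LCS) of the current output and the reference, deletes every other current token, and inserts the missing reference tokens into the gaps (the edit distance is then len(cur) + len(tgt) - 2 × LCS). The function names `lcs_alignment` and `expert_actions` are hypothetical, and this is an illustrative oracle, not the authors' code.

```python
from typing import List, Tuple


def lcs_alignment(cur: List[str], tgt: List[str]) -> List[Tuple[int, int]]:
    """Index pairs (i, j) of one longest common subsequence of cur and tgt."""
    n, m = len(cur), len(tgt)
    # dp[i][j] = LCS length of cur[i:] and tgt[j:]
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if cur[i] == tgt[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])
    pairs, i, j = [], 0, 0
    while i < n and j < m:
        if cur[i] == tgt[j]:
            pairs.append((i, j))
            i, j = i + 1, j + 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            i += 1
        else:
            j += 1
    return pairs


def expert_actions(cur: List[str], tgt: List[str]):
    """Oracle deletion flags, placeholder counts and fill tokens.

    Assumes both sequences start with <s> and end with </s>, so the
    boundaries are always aligned and every gap is covered.
    """
    pairs = lcs_alignment(cur, tgt)
    kept = {i for i, _ in pairs}
    delete = [i not in kept for i in range(len(cur))]
    # After deletion the sequence is the LCS; each gap between consecutive
    # kept tokens receives the target tokens missing from it.
    js = [j for _, j in pairs]
    counts = [b - a - 1 for a, b in zip(js, js[1:])]
    fills = [w for a, b in zip(js, js[1:]) for w in tgt[a + 1:b]]
    return delete, counts, fills


print(expert_actions(["<s>", "the", "dog", "</s>"],
                     ["<s>", "the", "cat", "sat", "</s>"]))
# ([False, False, True, False], [0, 2], ['cat', 'sat'])
```

Here the expert says: delete "dog", insert two placeholders before `</s>`, and fill them with "cat" and "sat", i.e. three edits in total (4 + 5 - 2 × 3).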

Inference is performed by greedy decoding (that is, the most probable prediction is chosen at each step), rather than by searching over several highly probable hypotheses, because search does not yield much improvement. This may be because LevT inserts and deletes tokens dynamically, so it can easily revoke tokens that turn out to be sub-optimal and re-insert better ones.
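Greedy decoding here means that each classifier's decision is simply the argmax at every position, taken independently, with no beam of alternative hypotheses. The sketch below applies this to hypothetical classifier outputs for one refinement step; the probability values and the helper name `greedy` are made up for illustration. Note that each classifier scores the sequence produced by the previous step, so the placeholder classifier sees the two tokens left after deletion (one gap).

```python
from typing import List, Sequence


def greedy(dists: Sequence[Sequence[float]]) -> List[int]:
    """Pick the most probable class independently at every position."""
    return [max(range(len(d)), key=d.__getitem__) for d in dists]


# Hypothetical outputs for one step, starting from 3 tokens.
del_dists = [[0.9, 0.1], [0.3, 0.7], [0.8, 0.2]]  # [p(keep), p(delete)] per token
plh_dists = [[0.1, 0.2, 0.7]]                     # p(0, 1, 2 placeholders) for the one gap left
vocab = ["the", "cat", "sat"]
tok_dists = [[0.1, 0.8, 0.1], [0.2, 0.1, 0.7]]    # p(word) per [PLH]

delete_flags = [c == 1 for c in greedy(del_dists)]
plh_counts = greedy(plh_dists)
words = [vocab[c] for c in greedy(tok_dists)]
print(delete_flags, plh_counts, words)
```

This prints `[False, True, False] [2] ['cat', 'sat']`: the middle token is deleted, two placeholders are inserted, and they are filled with the most probable words.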

Machine Translation Results

LevT achieves comparable and sometimes better translation quality than the fully autoregressive baseline (the standard Transformer), as measured by BLEU. However, it is much more efficient at decoding. With the deletion, placeholder and token classifiers all placed after the 6th decoder block, decoding is 3.5 times faster than with the standard Transformer. The authors also experimented with placing the deletion and placeholder classifiers after an earlier block, while keeping the token classifier attached to the 6th block, since token prediction is the more challenging task. With the deletion and placeholder classifiers placed after the 1st block, decoding becomes 5 times faster than with the standard Transformer, at the cost of only 0.5 BLEU.
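Why early exits help can be seen with a back-of-the-envelope count of decoder-block evaluations per refinement iteration. This assumes that each of the three operations runs its own decoder pass (each one sees a different sequence) and stops at the block its classifier is attached to, and it ignores the encoder, the classifier heads and the number of iterations. It illustrates the saving only; it does not reproduce the measured 3.5x and 5x speed-ups.

```python
def decoder_blocks_per_iteration(del_exit: int, plh_exit: int, tok_exit: int) -> int:
    # Each operation runs one decoder pass on its own input sequence and
    # stops at the block its classifier is attached to.
    return del_exit + plh_exit + tok_exit


baseline = decoder_blocks_per_iteration(6, 6, 6)  # all classifiers after block 6
early = decoder_blocks_per_iteration(1, 1, 6)     # deletion/placeholder after block 1
print(baseline, early, baseline / early)  # 18 8 2.25
```

Under these assumptions, moving the two cheaper classifiers to block 1 cuts the decoder work per iteration from 18 to 8 block evaluations.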

The above scores are obtained by using the same decoder weights for all classifiers. It is also possible to train separate decoders for each operation, which increases the number of model parameters but does not affect inference time. Gu et al. observe that weight sharing is especially beneficial between the two insertion operations (placeholder and token classifiers). In addition, training distinct decoders for the deletion and insertion operations yields a further 0.5 BLEU improvement. This result may indicate that insertion and deletion capture complementary information and need the extra capacity that comes from learning them separately.
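The trade-off between sharing and separate decoders can be made concrete with a small bookkeeping sketch. The scheme names and `params_per_decoder` are hypothetical units, not figures from the paper, and the sketch assumes all decoders have the same size: separate decoders multiply the parameter count, while every operation still costs one decoder pass per iteration. The "insertion shared" scheme corresponds to the configuration described above (one decoder shared by the two insertion operations, a distinct one for deletion).

```python
from typing import Dict, List, Tuple

OPERATIONS = ("deletion", "placeholder", "token")

# Hypothetical sharing schemes: each inner list is one decoder whose
# weights are shared by the operations it contains.
SCHEMES: Dict[str, List[List[str]]] = {
    "all shared": [["deletion", "placeholder", "token"]],
    "insertion shared": [["deletion"], ["placeholder", "token"]],
    "none shared": [["deletion"], ["placeholder"], ["token"]],
}


def cost(scheme: List[List[str]], params_per_decoder: int) -> Tuple[int, int]:
    """Return (decoder parameters, decoder passes per refinement iteration)."""
    ops = sorted(op for group in scheme for op in group)
    if ops != sorted(OPERATIONS):
        raise ValueError("each operation must use exactly one decoder")
    n_params = len(scheme) * params_per_decoder
    # Every operation runs one decoder pass, whichever decoder it uses,
    # so sharing changes the parameter count but not the number of passes.
    passes = len(OPERATIONS)
    return n_params, passes


for name, scheme in SCHEMES.items():
    print(name, cost(scheme, params_per_decoder=1))
```

This prints `(1, 3)`, `(2, 3)` and `(3, 3)` for the three schemes: only the parameter count grows.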

Results on automatic post-editing show that LevT also outperforms the baseline Transformer on this task.