Issue #172 - The Curious Case of Hallucinations in Neural Machine Translation

Introduction

Neural MT models can translate very well, but sometimes produce translations which seem to have nothing to do with the input. These translations are known as “hallucinations”. In this week’s post, we look at work on detecting and explaining hallucinations in machine translation by Raunak et al. (2021).

What are hallucinations, and what causes them?

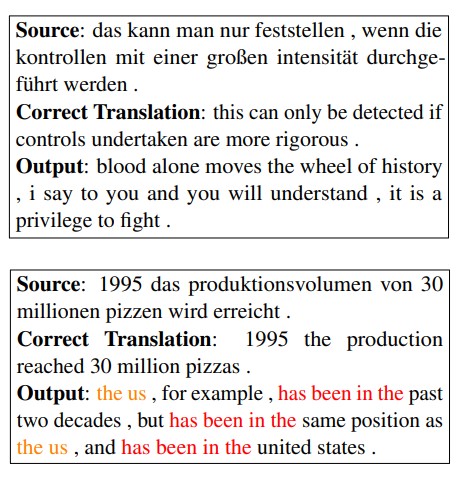

The paper defines hallucinations as machine translations that are completely decoupled from the input sentence. It focuses on two common types of hallucination: a fluent but “detached” output (top example) or a disfluent “oscillatory” output (bottom example).

A typical trigger for hallucinations is an input perturbation, meaning that there is something in the source sentence that the MT model is not expecting. This could be a mistake, such as a typo, or simply some unusual word in the input. Prior work by Wang & Sennrich (2020), which we discussed in Neural MT Weekly #152, has also found that hallucinations tend to happen when the input is out of domain relative to the model. That is, the input has a style or vocabulary that the model is not used to.

A typical trigger for hallucinations is an input perturbation, meaning that there is something in the source sentence that the MT model is not expecting. This could be a mistake, such as a typo, or simply some unusual word in the input. Prior work by Wang & Sennrich (2020), which we discussed in Neural MT Weekly #152, has also found that hallucinations tend to happen when the input is out of domain relative to the model. That is, the input has a style or vocabulary that the model is not used to.

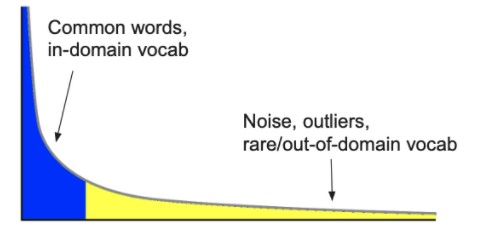

Natural language vocabulary typically follows a power distribution known as Zipf’s law which includes a few very common words and a long tail of rare words. Words in the long tail often carry a lot of meaning but could cause hallucinations. These perturbations and out of domain vocabulary both exist in the long tail of data the model might have been exposed to.

But these are natural variations of language – why do they cause such unusual behavior? The authors hypothesise that these outliers tend to be easily memorized by the model – they often appear only in very limited contexts and may only have a single translation. Sentences containing memorized outliers may be more likely to cause hallucinations if perturbed.

But these are natural variations of language – why do they cause such unusual behavior? The authors hypothesise that these outliers tend to be easily memorized by the model – they often appear only in very limited contexts and may only have a single translation. Sentences containing memorized outliers may be more likely to cause hallucinations if perturbed.

“Memorized” sentences are more likely to cause hallucinations

The authors test this hypothesis by calculating how far various sentences are memorized, and then comparing hallucinations when perturbing the most memorized sentences. They calculate memorization value (MV) as the average change in a translation metric when the model has or has not been trained on a sentence.

Say we train two models on sets of similar training data. The first set of training data includes a sentence of interest, the second does not. If both models produce similar translations for the sentence of interest after training, that sentence is probably not memorized, but can be translated by generalization from other training data. That sentence would have low MV.

Now take the example that the first model produces a high-BLEU translation for the sentence and the second produces a low-BLEU translation. This suggests the first model may have memorized that sentence and its translation only because it has seen them during training, as the model that had not seen it during training struggled. In this case the sentence has a high MV as measured by BLEU.

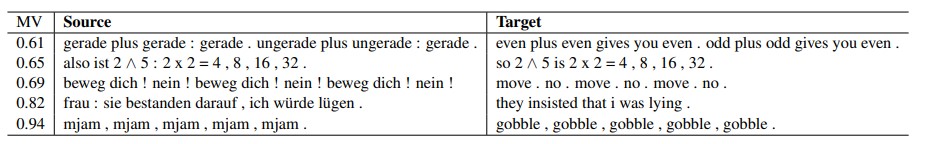

The table below shows examples of some German-English sentence pairs with a high MV, which tend to have unusual structure, rare vocabulary, or idiomatic language. It may be very hard to know how to translate these from other examples, giving them a high training loss. As a result, the model has a strong incentive to simply reproduce them exactly, wherever they appear.

Having found memorized sentences, the authors explore whether they lead to hallucinations. The authors perturb sets of 100 sentences by inserting one of thirty common words into the start of each sentence. Their baseline perturbs a set of random sentences. They compare with perturbing sets of the sentences with the highest MV as measured by various metrics, such as BLEU and the character-based metric chrF.

Having found memorized sentences, the authors explore whether they lead to hallucinations. The authors perturb sets of 100 sentences by inserting one of thirty common words into the start of each sentence. Their baseline perturbs a set of random sentences. They compare with perturbing sets of the sentences with the highest MV as measured by various metrics, such as BLEU and the character-based metric chrF.

They then look for hallucinations after translating each perturbed set. They find that only one or two hallucinations occur when perturbing the random set, but several hundred when perturbing the most memorized sentences as measured by chrF.

Corpus-level noise patterns can cause hallucinations

The authors also investigate hallucinations from corpus-level noise patterns. They find different noise patterns can have different effects:

- Misaligned training sentences can produce detached hallucinations, which are fluent but incorrect.

- If many source sentences are misaligned to the same target sentence, the model may learn to hallucinate that target sentence out of context.

- If the same source sentence is misaligned to many different target sentences, the model tends to produce oscillatory hallucinations.

Corpus-level noise can be related to synthetic training data generation. One common source of extra training data for machine translation is forward or back translation, where an existing MT model is used to translate sentences in one language to produce bilingual training examples.

However, hallucinations can be produced during this process, creating misaligned training sentences. The authors show that this can lead to “hallucination amplification”, where a model trained on hallucinations is more likely to produce further hallucinations.

What can we do about hallucinations?

Based on their findings, the authors make recommendations for mitigating hallucinations in machine translation:

- Augmenting the training data to move vocabulary out of the “long tail”.

- Filter the training data, especially synthetic data, to remove invalid sentence pairs that aren’t actually good translations.

- Use training algorithms that avoid memorization and are not too sensitive to high-loss training examples.

In summary

Hallucinations can have a serious impact on machine translation quality. Raunak et al. (2021) demonstrate some causes of this behavior, and make recommendations to reduce the risk of hallucinations in machine translation.