Issue #136 - Neural Machine Translation without Embeddings

Introduction

Nowadays, Byte Pair Encoding (BPE) has become one of the most commonly used tokenization strategies due to its universality and effectiveness in handling rare words. Although many previous works show that subword models with embedding layers generally achieve more stable and competitive results in neural machine translation (NMT), character-based (see issue #60) and byte-based subword (see issue #64) models have also been shown to perform better in some particular scenarios. In this post, we take a look at work by Shaham and Levy (2021), which investigates a simple but universal byte tokenization strategy without embedding layers for NMT.

Embeddingless model with byte tokenization

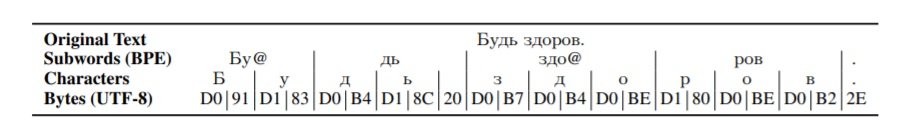

UTF-8 is an encoding standard that can represent text in any writing system using a variable number of bytes (one to four) per character. Since a byte can take only 256 distinct values, byte tokenization lets text in any language be represented with a universal, fixed vocabulary of just 256 token types, far smaller than word-, subword-, or even character-level vocabularies. Wang et al. (2019) proposed learning subwords at the byte level. Shaham and Levy (2021), by contrast, learn no subwords: each character is represented by its 1 to 4 UTF-8 bytes, and each byte is treated as a separate token, as shown in Figure 1:
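To make byte tokenization concrete, here is a minimal Python sketch that turns the Figure 1 string into byte tokens using only the standard library. The function names `byte_tokenize` and `byte_detokenize` are illustrative, not taken from the paper's code.

```python
def byte_tokenize(text: str) -> list[int]:
    """Split text into UTF-8 byte tokens; each token id lies in [0, 255]."""
    return list(text.encode("utf-8"))


def byte_detokenize(tokens: list[int]) -> str:
    """Invert byte_tokenize.

    errors="replace" guards against invalid byte sequences, e.g. a model
    emitting only the first byte of a multi-byte character.
    """
    return bytes(tokens).decode("utf-8", errors="replace")


text = "Будь здоров."
tokens = byte_tokenize(text)
print(len(text), len(tokens))  # 12 22
print(tokens[:2])              # [208, 145], the two bytes of 'Б'
print([len(c.encode("utf-8")) for c in text])  # bytes per character
```

Each Cyrillic letter takes two bytes while the space and the period take one, so 12 characters become 22 tokens, all drawn from the same 256-symbol vocabulary.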

Figure 1: Subword, character, and byte tokens of the string “Будь здоров.”

Besides the tokenization strategy, the main difference of this approach is that the input and output embedding layers are removed from the standard Transformer encoder-decoder architecture. Instead, each token is represented by a one-hot encoding over the fixed byte vocabulary: a binary vector with a 1 at the position of the corresponding byte and 0 everywhere else. Because of the nature of one-hot vectors, the dropout layers on the encoder input and decoder output are removed, while dropout on the decoder input is kept. To predict the next token for an input of n tokens (a single character may span several bytes, and thus several tokens), a softmax across each vector's dimensions is applied to the output of the last decoder layer.
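The sketch below illustrates the two embeddingless ends of the model with NumPy: one-hot input vectors in place of an embedding lookup, and a softmax taken directly over the dimensions of the decoder output. For simplicity it assumes the hidden size equals the vocabulary size (256), so no projection is needed; how the paper handles a larger model dimension is not covered here. The decoder output is a random stand-in, and the function names are hypothetical.

```python
import numpy as np

VOCAB_SIZE = 256  # one token type per possible byte value


def one_hot(token_ids: list[int], vocab_size: int = VOCAB_SIZE) -> np.ndarray:
    """Map n byte ids to an (n, vocab_size) binary matrix (no learned embedding)."""
    ids = np.asarray(token_ids)
    out = np.zeros((len(ids), vocab_size), dtype=np.float32)
    out[np.arange(len(ids)), ids] = 1.0
    return out


def next_byte_distribution(decoder_output: np.ndarray) -> np.ndarray:
    """Softmax across the dimensions of each decoder output vector.

    Assumes the decoder hidden size equals VOCAB_SIZE, so no output
    embedding / projection matrix is used.
    """
    shifted = decoder_output - decoder_output.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


x = one_hot(list("Будь".encode("utf-8")))
print(x.shape)  # (8, 256): 4 characters, 8 byte tokens

# Random stand-in for the last decoder layer's output (illustration only).
rng = np.random.default_rng(0)
h = rng.normal(size=(8, VOCAB_SIZE))
probs = next_byte_distribution(h)
predicted = probs.argmax(axis=-1)  # most likely next byte at each position
```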

One advantage of this approach is that removing the embedding layers leaves the model with fewer parameters. However, byte tokenization produces longer sequences than character or subword tokenization, which increases memory consumption and, as a side effect, slows down processing compared to other methods.
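Both sides of this trade-off can be estimated with simple arithmetic. The sketch below uses hypothetical sizes (an 8,000-type subword vocabulary and a model dimension of 512, not figures from the paper) to count the parameters in one subword embedding matrix that a byte-level embeddingless model would not need, and relies on the general fact that standard self-attention builds an n × n score matrix, so its memory grows quadratically with sequence length.

```python
def embedding_params(vocab_size: int, d_model: int) -> int:
    """Parameters in one vocab_size x d_model embedding matrix."""
    return vocab_size * d_model


# Hypothetical sizes chosen for illustration, not taken from the paper.
SUBWORD_VOCAB = 8000
D_MODEL = 512
saved = embedding_params(SUBWORD_VOCAB, D_MODEL)
print(saved)  # 4096000 parameters per removed embedding matrix

text = "Будь здоров."
n_chars = len(text)
n_bytes = len(text.encode("utf-8"))
# Self-attention scores form an n x n matrix per head, so memory scales with n^2.
attention_ratio = (n_bytes ** 2) / (n_chars ** 2)
print(n_chars, n_bytes, round(attention_ratio, 2))  # 12 22 3.36
```

For this Cyrillic example, switching from characters to bytes almost doubles the sequence length and more than triples the attention score matrix, which is where the memory and speed costs come from.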

Experiments and results

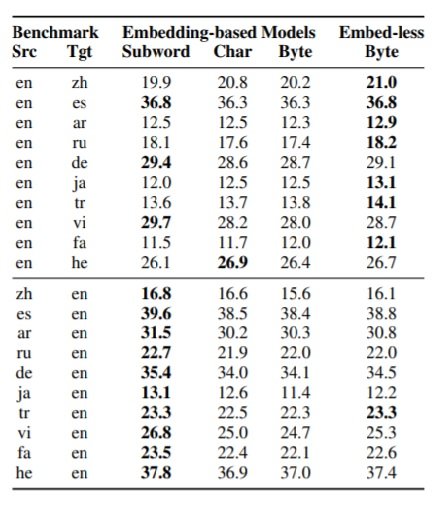

They trained byte-tokenized embeddingless models as well as standard Transformer models at the byte, character, and subword levels for 10 languages, translating both from and into English. All training data come from IWSLT, with sizes ranging from 100K to 200K parallel sentences. The results are shown in Table 1:

Table 1: BLEU scores of the baseline and embeddingless models on the IWSLT dataset.

According to the results, removing the embedding layers, and thus using fewer parameters than the standard architecture, surprisingly does not significantly affect performance. When translating from English into other languages, in the most controlled setting, the byte-tokenized embeddingless models even outperform the other models in most cases. However, when translating from other languages into English, subword models outperform the other models in all cases. The authors suggest that one possible reason is that the data were originally translated from English into the other languages. In addition, the standard tokenizer they used might work particularly well for English but less so for other languages.

The first experiments suggest that dropout on the decoder input is an important factor behind the performance gains of byte-based embeddingless models. The authors therefore re-evaluate all models, treating token dropout as a tunable hyperparameter. When translating from English into other languages, the different models then deliver similar performance, with no single model dominating the others. However, when translating from other languages into English, subword models consistently outperform the rest.
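As an illustration of treating token dropout as a hyperparameter, here is a minimal NumPy sketch. It assumes one common reading of token dropout, zeroing entire decoder input vectors with probability `p`; the paper's exact formulation may differ, and the function name and the choice not to rescale kept tokens are assumptions.

```python
import numpy as np


def token_dropout(inputs: np.ndarray, p: float, rng: np.random.Generator) -> np.ndarray:
    """Zero out entire token vectors (rows of an (n, d) matrix) with probability p.

    Assumption: kept tokens are not rescaled by 1 / (1 - p), unlike
    standard element-wise dropout.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = rng.random(inputs.shape[0]) >= p  # one keep/drop decision per token
    return inputs * keep[:, None]


rng = np.random.default_rng(0)
x = np.eye(256, dtype=np.float32)[[208, 145, 209, 131]]  # one-hot rows for 4 byte ids
dropped = token_dropout(x, p=0.3, rng=rng)
print(dropped.sum(axis=1))  # 1.0 for kept tokens, 0.0 for dropped ones
```

During training, `p` would be tuned like any other hyperparameter on a development set; at inference time it is set to 0, which leaves the inputs unchanged.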