Issue #61 - Context-Aware Monolingual Repair for Neural Machine Translation

Introduction

In issue #15 and issue #39 we looked at various approaches to document-level translation. In this blog post, we look at another approach, proposed by Voita et al. (2019a), for capturing context information. It is unusual in that it uses only target-side monolingual data to improve how machine translation handles discourse phenomena (deixis, ellipsis, lexical cohesion, ambiguity, anaphora, etc.), which often require context to resolve.

Document-level Repair

They propose a two-pass machine translation approach. In the first pass, each sentence is translated in isolation, giving a context-agnostic translation. In the second pass, these translations are fed through a document-level repair (DocRepair) model that corrects the contextual errors.
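The two-pass flow can be sketched as follows. This is a minimal illustration, not the authors' code: `translate_sentence` and `repair_group` are hypothetical stand-ins for a sentence-level MT system and a trained DocRepair model, and the toy functions exist only to make the sketch runnable.

```python
from typing import Callable, List


def two_pass_translate(
    source_sentences: List[str],
    translate_sentence: Callable[[str], str],
    repair_group: Callable[[List[str]], List[str]],
) -> List[str]:
    """Translate each sentence in isolation, then repair the group jointly."""
    # First pass: context-agnostic translation, one sentence at a time.
    first_pass = [translate_sentence(s) for s in source_sentences]
    # Second pass: the repair model sees the whole group of translations.
    return repair_group(first_pass)


# Toy stand-ins (assumptions, for illustration only).
def toy_translate(sentence: str) -> str:
    return sentence.upper()


def toy_repair(group: List[str]) -> List[str]:
    return [s.strip() for s in group]


repaired = two_pass_translate([" hello ", "world"], toy_translate, toy_repair)
```

Because the second pass only consumes target-language text, the repair step can in principle sit behind any sentence-level system without changing it.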

The DocRepair model is a standard sequence-to-sequence Transformer. The sentences in a group are concatenated to form a long, inconsistent pseudo-sentence, and the Transformer is trained to map such pseudo-sentences to consistent ones.
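To make the pseudo-sentence idea concrete, here is a small sketch of joining a group into one sequence and splitting it back. The separator string `<sep>` and the helper names `join_group` and `split_group` are assumptions made for illustration, not details taken from the paper.

```python
from typing import List

SEP = "<sep>"  # hypothetical separator token; the real token may differ


def join_group(sentences: List[str]) -> str:
    """Concatenate a group of sentences into one pseudo-sentence."""
    return f" {SEP} ".join(s.strip() for s in sentences)


def split_group(pseudo_sentence: str) -> List[str]:
    """Recover the individual sentences from a pseudo-sentence."""
    return [part.strip() for part in pseudo_sentence.split(SEP)]


group = ["I saw her .", "She waved ."]
pseudo = join_group(group)
restored = split_group(pseudo)
```

Keeping an explicit separator lets the repaired output be mapped back to the original sentence boundaries.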

Training the DocRepair model

The DocRepair model is a monolingual sequence-to-sequence model that maps inconsistent groups of sentences to consistent ones. Consistent groups come from monolingual document-level data. Inconsistent groups are obtained by round-trip translation (Russian to English to Russian): each sentence in a consistent group is replaced with its round-trip translation, produced in isolation from context. The model is therefore trained to translate inconsistent Russian into consistent Russian.

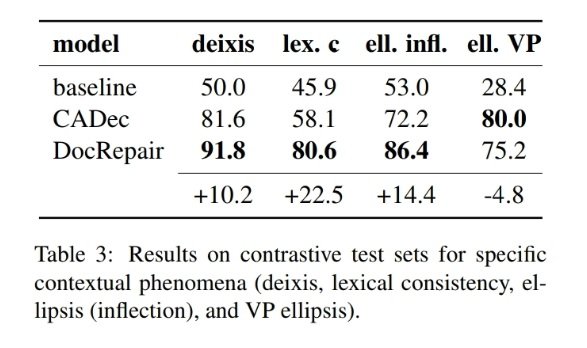
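The construction of training pairs described above might look roughly like this. It is a sketch under stated assumptions: `to_english` and `to_russian` are hypothetical sentence-level translation functions, and the non-overlapping grouping and the default `group_size` are illustrative choices rather than the authors' exact setup.

```python
from typing import Callable, List, Tuple

Group = List[str]


def make_training_pairs(
    document: List[str],
    to_english: Callable[[str], str],
    to_russian: Callable[[str], str],
    group_size: int = 4,  # assumed value, for illustration
) -> List[Tuple[Group, Group]]:
    """Build (inconsistent, consistent) groups from a monolingual document."""
    pairs = []
    # Walk the document in non-overlapping windows of group_size sentences.
    for start in range(0, len(document) - group_size + 1, group_size):
        consistent = document[start:start + group_size]
        # Round-trip each sentence on its own, so no context is available.
        inconsistent = [to_russian(to_english(s)) for s in consistent]
        pairs.append((inconsistent, consistent))
    return pairs


# Toy stand-ins: lowercasing plays the role of a lossy round trip.
doc = ["A", "B", "C", "D", "E"]
pairs = make_training_pairs(doc, lambda s: s.lower(), lambda s: s, group_size=2)
```

The model's source side is the inconsistent group and its target side is the original group, so no parallel document-level data is needed at this stage.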

For training, they used English-Russian data from OpenSubtitles. To evaluate discourse phenomena, they used the contrastive test sets of Voita et al. (2019b), which cover deixis, lexical cohesion, ellipsis (noun phrase) and ellipsis (verb phrase).

Results

The results show that DocRepair improves on the baseline for all discourse phenomena, and on the CADec model for all except ellipsis (VP). CADec is trained on contextual parallel data, whereas DocRepair uses only monolingual data.

Similarly, in human evaluation the DocRepair model outperformed the baseline. On a test set of 700 examples, annotators judged the two translations to be of equal quality in about 52% of cases, preferred the DocRepair output in 35%, and preferred the baseline in 13%.
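As a quick reading aid, the percentages can be converted into approximate example counts and a preference rate among the non-tied cases. The figures below are derived only from the rounded percentages quoted above, so they are approximations rather than numbers reported by the authors.

```python
total_examples = 700

# Rounded percentages as quoted in the text.
percent = {"equal": 52, "docrepair_better": 35, "baseline_better": 13}

# Approximate number of examples in each category.
counts = {label: total_examples * p // 100 for label, p in percent.items()}

# Share of DocRepair wins among cases where annotators saw a difference.
decided = percent["docrepair_better"] + percent["baseline_better"]
docrepair_share_of_decided = percent["docrepair_better"] / decided

print(counts)
print(f"{docrepair_share_of_decided:.1%}")
```

Under these rounded figures, annotators who saw a difference preferred DocRepair in roughly 73% of cases.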