Issue #120 - Interactive Neural Machine Translation

Introduction

Despite the great quality improvements achieved recently by machine translation (MT) technology thanks to neural systems, it is still not error-free. To achieve a controlled quality, the output of a machine translation engine must be corrected by a human agent in a post-editing phase. Today we take a look at a paper from Peris et al. (2017) (publisher version, non-final author version), which describes how to optimise this process by improving the neural MT hypothesis interactively, given the human correction. Although the neural MT technology used in the paper is not the state of the art anymore, the interactivity protocols described are still up-to-date. We finish the post by discussing how interactive neural MT can be revisited with the edit-based models proposed recently.

Interactive-predictive NMT (INMT)

Interactive-predictive NMT (INMT) is an iterative prediction–correction process: each time the user corrects a word, the system reacts offering a new translation hypothesis, expected to be better than the previous one. In standard NMT, generation is performed left to right, and at each step the neural network provides, for each word in the vocabulary, the probability of this word to be the next word given the current hypothesis. The search of the best hypothesis is performed via beam search. In INMT, in order to provide a new translation which takes the user feedback into account, the probabilities of the NMT model can be used without changes. What does change is the search for the best hypothesis given the NMT model probabilities, because the decoding is now constrained by the user's corrections. Two different interactive-predictive protocols are proposed by Peris et al.: the prefix-based and the segment-based protocols.

Prefix-based interactive-predictive protocol:

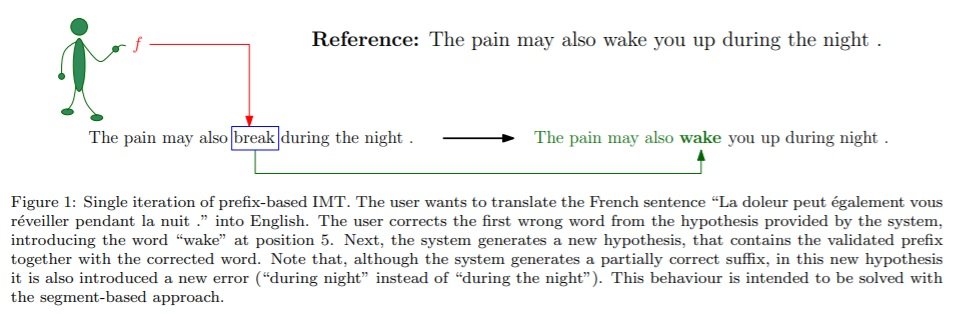

The user is forced to follow a strict left-to-right interaction (or right-to-left, for languages such as Arabic or Hebrew), as in Figure 1 below. A correction by the user of the i-th word conveys two pieces of information: it states the new content of the i-th target word, but it also validates the hypothesis up to this position (words 1 to i-1). The search of the best hypothesis given this feedback is thus constrained to the fact that the prefix in positions 1 to i is known. This constraint is easy to include: the probability of a word until position i is 1, and from position i+1, the probability is the one given by the NMT model. The prefix-based protocol is restrictive for the human translators because it forces them to perform the validation left-to-right. In addition, the translation constrained by a validated prefix can introduce new errors in the suffix, which have to be also corrected.

Segment-based interactive-predictive protocol:

The user is allowed to validate non-overlapping segments of the hypothesis, in addition to correcting wrong words. In this case, the search is reformulated as the generation of the optimal sequence of non-validated segments. In the next hypothesis, the order of the validated segments is conserved, but the positions of the validated segments are unknown beforehand. The search algorithm first estimates the length of the non-validated segments. To this end, it “looks ahead” into the next decoding steps. The non-validated segments are expanded until a maximum length L. Once the length of the non-validated segment has been estimated, words belonging to that segment are generated following the regular beam search.Character-level interaction:

It is useful for the user to be able to interact with the INMT system at character-level. However, character-based NMT is much slower than (sub)word-based NMT because the segments are longer. This would affect the system response time and could cause an inadequate user experience. To overcome this issue, the feedback signal is introduced by the user at the character-level, while the system internally works at the (sub)word level. Thus the correction can be at the u-th position of the i-th word of the hypothesis. The INMT system needs to generate a word constrained by positions 1 to u. This is performed by using a mask on the target vocabulary, which filters those words that are incompatible with this constraint.Unknown words:

The user will likely introduce out-of-vocabulary words. These words can be mapped to a specific token (such as “<unk>”), and then replaced by the original word introduced by the user. Another approach consists of splitting the unknown word into smaller, known, subwords.Results and discussion

The authors carry out an evaluation of their method on five different corpora, using metrics assessing human effort: the word stroke ratio (WSR) and the mouse action ratio (MAR). The NMT system used in the evaluation is a recurrent neural network. According to the results, neural models obtained significant improvements in effort reduction over a state-of-the-art phrase-based system. The segment-based protocol lowered the typing effort required by the user more than the prefix-based protocol.

Because decoding in interactive neural MT is based on a search constrained by the user corrections, this work connects to some novel ideas which make constrained decoding more natural and efficient. Recently, new classes of transformer models have been proposed, in which the output is not generated left-to-right, but with edit operations such as deletions, insertions or repositions (see post #86 and #118). In these models, you don’t need to re-generate the translation from scratch if you want to post-edit an hypothesis. The decoding can start from the current hypothesis and perform only the required edit operations. Thus constrained decoding for interactive neural MT just means starting the generation of the corrected hypothesis with the segments validated by the user, and letting the edit-based model generate the rest. The validated segments can be taken as hard constraints by preventing deletion operations on them.