The enormous growth in digital content, which fuels localization volumes, has only accelerated during the pandemic. Spare a thought for the enterprise localization managers who must decide which critical content needs translation and how their organization's localization goals can be achieved within limited budgets and timeframes.

Luckily, the days when localization was an afterthought are coming to an end, and long overdue changes are being made. As we reported in our 2019 Five Future States of Content campaign, effective content drives sales, generates leads and boosts brand awareness. Companies with a global footprint now understand the impact localization can have on revenue and brand, which helps them draw up targeted localization plans in line with their content needs.

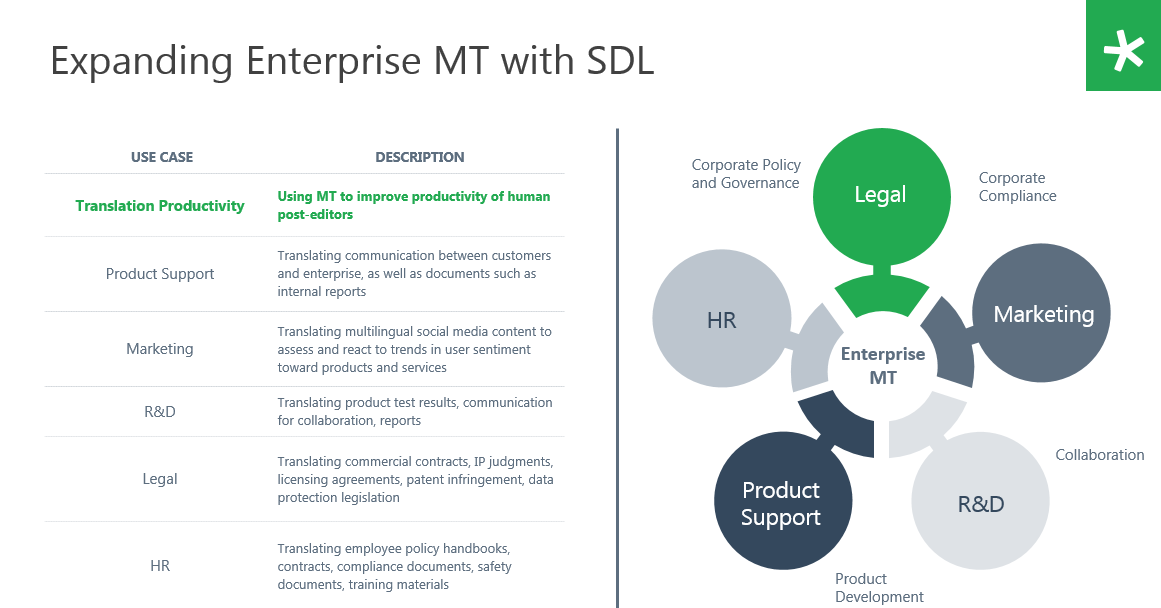

We also see a shift from a purely quality-centric approach to a holistic content approach. This is where an MT-first strategy, with machine translation (MT) at its core, comes in, championed by a new wave of tech-savvy localization managers. As we know, MT technology can be useful for a huge variety of content, from digesting product reviews or support queries from various target markets to monitoring social media commentary. Valuable multilingual data is everywhere, and it requires some form of understanding but does not necessarily warrant an expensive human translation process.

We have known for some time now that there is no way to deal with this amount of content through traditional translation alone, and companies increasingly use a hybrid approach, combining MT with human editing or, for some content, using pure MT. This mixed approach is gaining in popularity. For us, the question is how to empower localization managers to implement a process that addresses all their content needs. Which tools or processes do they need?
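To make the hybrid approach concrete, here is a minimal sketch of how a localization manager might route each piece of content to one of three workflows: pure MT, MT with human editing, or full human translation. The content attributes, the routing rules and the `route_content` function are hypothetical illustrations under simple assumptions, not a description of any specific product or process.

```python
from dataclasses import dataclass
from enum import Enum


class Workflow(Enum):
    """Hypothetical translation workflows in a hybrid setup."""
    RAW_MT = "raw MT"                      # machine translation only, for understanding
    MT_POST_EDIT = "MT + human editing"    # MT output reviewed by a human editor
    HUMAN = "human translation"            # full human translation


@dataclass
class ContentItem:
    # Hypothetical attributes a localization manager might track per item.
    name: str
    customer_facing: bool  # will customers read the translation directly?
    brand_critical: bool   # marketing, legal or other high-risk copy


def route_content(item: ContentItem) -> Workflow:
    """Pick a workflow using simple, illustrative rules (an assumption)."""
    if item.brand_critical:
        return Workflow.HUMAN
    if item.customer_facing:
        return Workflow.MT_POST_EDIT
    # Content needed only for internal understanding, such as
    # product reviews, support queries or social media commentary.
    return Workflow.RAW_MT


if __name__ == "__main__":
    items = [
        ContentItem("product reviews", customer_facing=False, brand_critical=False),
        ContentItem("help center articles", customer_facing=True, brand_critical=False),
        ContentItem("campaign slogan", customer_facing=True, brand_critical=True),
    ]
    for item in items:
        print(f"{item.name}: {route_content(item).value}")
```

In practice, the rules would reflect an organization's own content inventory, budget and quality requirements; the point of the sketch is that routing decisions can be made explicit and reviewed rather than left implicit.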

Owning the data challenge

We know that translating content instantly increases its reach. We also know that human translation alone is not the answer to the content challenge. Data is being created all the time, and we need a holistic solution to cross language barriers. One way to address the data and content challenge is technology. Some companies already use smart technology not simply to understand and harness content but to gain rapid insight and decide on appropriate actions. This is not the first time we have seen technology used to augment the scale of human skills and intelligence.

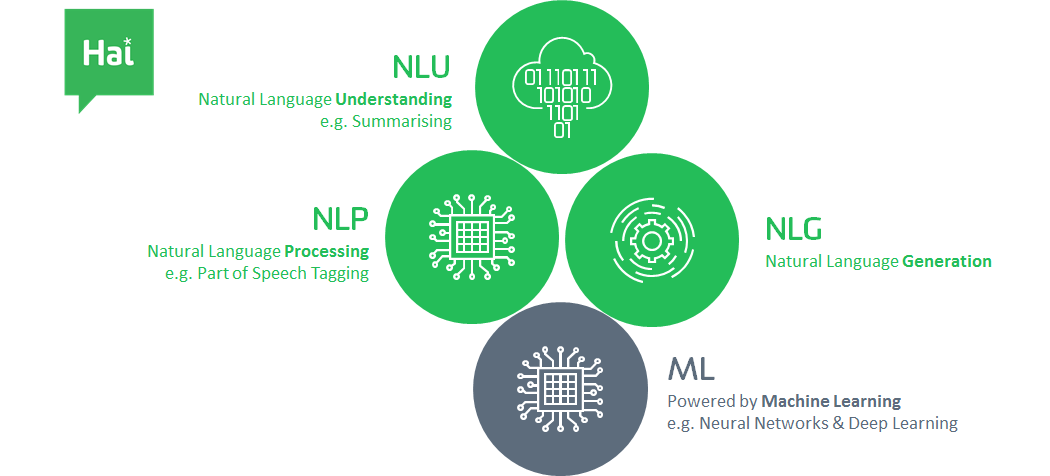

The legal profession has already been using Natural Language Understanding (NLU) tools to triage documents and reduce the time it takes to prepare legal cases, allowing legal professionals to focus on the relevant documents. For multilingual documents, this keeps translation costs low, as only the required documents are translated. This ability to summarize could well serve other purposes within the enterprise space, allowing stakeholders to reach important insights faster.

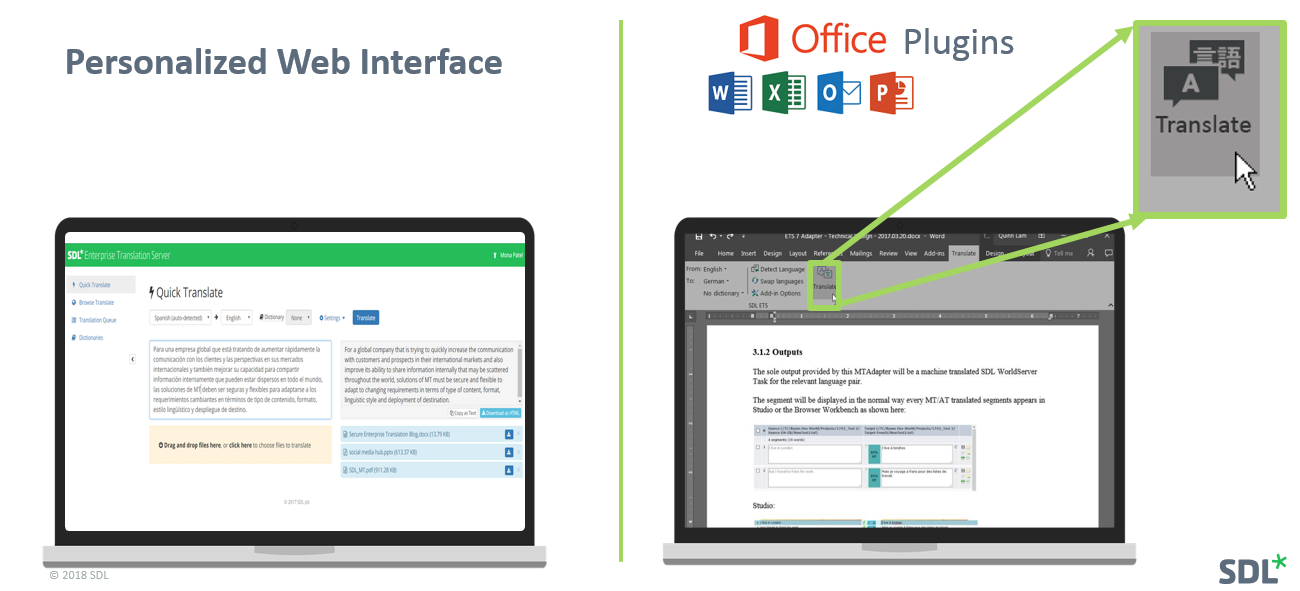

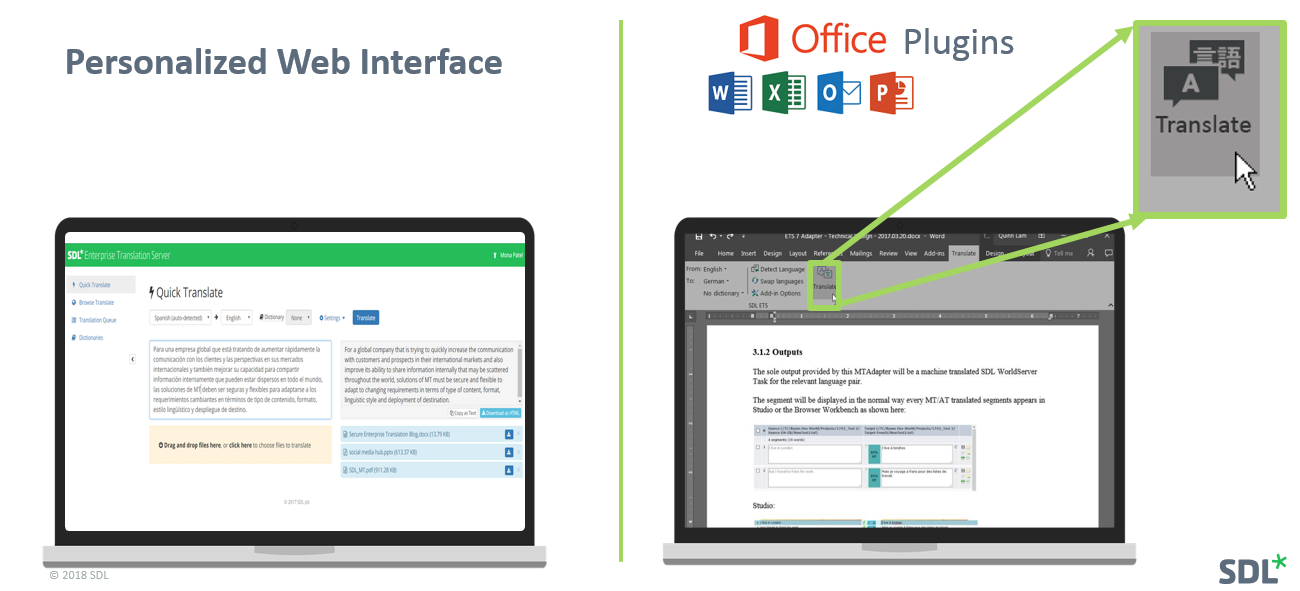

Enterprise content can be owned by a multitude of internal stakeholders and needs to be looked at with a view to engaging the customer base. Content should not be siloed but ready to be used, reused and shared for greater collaboration. In addition, confidential content requires a secure enterprise solution. One idea is to empower localization managers to act as a hub for multilingual content within the enterprise. These are professionals who understand not just language but, even more importantly, the value of language. Their language background and customer-oriented approach to content could serve as an ideal foundation for the holistic approach we are looking for. Secure MT systems, as opposed to free consumer-grade translation tools, can help increase company knowledge and collaboration as well as provide integrated tools to enhance productivity, as shown in the image below.

The technology to place localization at the intersection of this collaboration is already with us in the form of our secure MT Cloud solution. Centralizing the language challenge means companies can quantify their needs and decide on a holistic strategy. This closely mirrors developments in other parts of the delivery chain, where technology is becoming crucial. Providing localization managers with more structure and tools will give them more control and governance over how content is handled, help them fine-tune resource allocation and allow them to meet the content challenge head on.

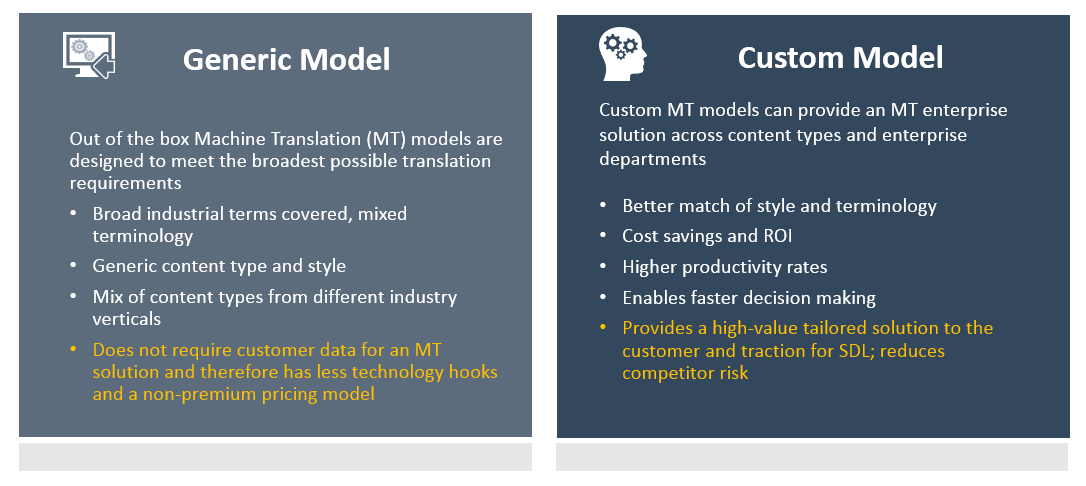

Custom vs generic models

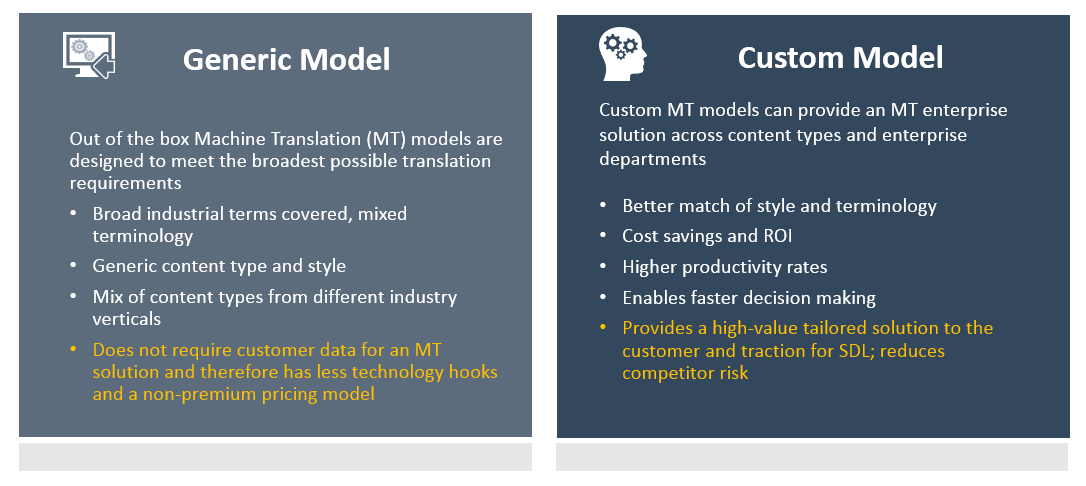

To achieve this through an MT-driven strategy, it is essential to have systems built specifically for enterprises. Localization managers own a very valuable asset in the form of Translation Memories (TMs). TMs deliver huge savings as well as brand consistency for the enterprise, but as needs and objectives shift, the true value of TMs moves from creating savings to becoming one of the integral building blocks of Linguistic AI. With access to quality TM data, localization managers can drive a very powerful neural machine translation (NMT) solution based on custom models that reflect the enterprise's voice, terminology and brand, leading to improved user engagement and a better end-user experience.

From cost center to “value add” content strategists

Enterprise localization departments are often regarded as cost centers and are subject to much scrutiny when it comes to articulating value. Redefining the Loc department as more than a cost center and extending its value will lead to new thinking that does not focus solely on on-time and on-budget delivery but enables strategic decisions on where and how to spend time and budget. Underpinned by data and metrics, a strategy can emerge that reuses and collaborates on content, enabling an understanding of true Loc spend against ROI.

To this end, artificial intelligence and machine learning now offer a plethora of solutions in the Natural Language Processing (NLP) space, from Natural Language Understanding (NLU) to Natural Language Generation (NLG) and machine translation. These are fast becoming ubiquitous and give us the ability to sift quickly through content, determine its value, and extract and deliver the right insights using MT or MT post-editing (MTPE).

There are a lot of companies offering to help solve the challenge of understanding, generating and translating content. However, it is certainly not easy to manage the necessary array of tools, processes and technologies. Very few companies have a truly holistic view of content and the technologies that enable it.

Hai is our advanced linguistic artificial intelligence assistant resulting from over 15 years of AI research, development and implementation. It has been uniquely created for language and content professionals working across the content cycle. Hai opens up new possibilities for people working with content by removing inefficient, repetitive tasks and freeing up writers and translators to use their time where it matters most. This makes it easier to scale, personalize and perfect content.

From conveyor belt to content knowledge hub

The central idea is to transform localization departments from a conveyor belt into a content knowledge hub, capable of advising and guiding on how best to localize and use content, with costs and ROI in mind. Of course, this requires training and an outcome-oriented rather than a task-focused approach. As we move towards an AI future, we will need to keep hold of one of the most important aspects of language: communication. In localization, content must be local or personal when speaking globally. We need to understand what our customers feel and say rather than creating content based on what we think they should be feeling or saying, or what we would like them to know. This is a great opportunity to harness and direct two of the greatest enterprise assets, data and content, and use them to innovate and connect. By placing the right tools in the right hands, enterprises can achieve a better balance between content growth and customer reach.